The era of maintaining a fixed “#1 position” is over.

In LLMs that use web search, a brand’s visibility in the answer can shift from one response to the next. Each time a query is asked, the model generates its output from a fresh sample of sources, which makes it harder for teams to measure and manage visibility across answer engines.

Our research set out to understand:

- Do volatility patterns repeat in ways content teams can measure and plan around?

- Does being mentioned in the answer—as if the LLM is recommending you—lead to more stable visibility than being cited alone?

- How can teams use these patterns to prioritize content updates, refreshes, and measurement windows?

Here’s how we approached the study, and what we uncovered about how AI search behavior affects brand visibility.

The Scope of the Study

We analyzed 800 queries across multiple runs of LLM outputs, generating more than 45,000 citations. The query set was a balanced mix of informational and commercial intents, with an emphasis on longer, more natural questions (7+ words) to reflect how users actually search. Across sessions, we tracked key patterns of citation drift—how brand visibility rotated in and out of LLM responses.

Specifically, we measured:

- Consistency: How often a URL stayed visible across multiple days.

- Mentions vs. citations: Whether the brand was explicitly named in the answer and cited as a source, or only included as a citation.

- Reappearance: How frequently dropped URLs came back in later runs.

- Retention: How long a URL stayed in the answer once it first appeared.
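To make three of these measurements concrete, here is a minimal Python sketch for a single query. It assumes each run is recorded as a set of cited URLs, treats consistency as the share of runs in which a URL appeared (runs standing in for days), counts a reappearance each time a URL returns after dropping out, and measures retention as the length of the unbroken streak that starts at the first appearance. `UrlStats` and `url_stats` are hypothetical names, not part of any AirOps tool, and mentions vs. citations would need per-answer brand data that this sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class UrlStats:
    consistency: float  # share of runs in which the URL appeared
    reappearances: int  # times the URL returned after dropping out
    retention: int      # unbroken runs visible, counted from first appearance


def url_stats(url: str, runs: list[set[str]]) -> UrlStats:
    """Compute visibility metrics for one URL across ordered runs of one query.

    Hypothetical data model: each run is the set of URLs cited in that answer.
    """
    present = [url in run for run in runs]
    n = len(present)
    consistency = sum(present) / n if n else 0.0

    # A reappearance is an absent -> present transition after the URL
    # has already been seen at least once.
    reappearances = sum(
        1
        for i in range(1, n)
        if present[i] and not present[i - 1] and any(present[:i])
    )

    # Retention: length of the streak that starts at the first appearance.
    retention = 0
    if True in present:
        for visible in present[present.index(True):]:
            if not visible:
                break
            retention += 1

    return UrlStats(consistency, reappearances, retention)


# Toy data for illustration only: five runs of one query.
runs = [{"a.com", "b.com"}, {"b.com"}, {"a.com"}, set(), {"a.com", "b.com"}]
print(url_stats("a.com", runs))  # UrlStats(consistency=0.6, reappearances=2, retention=1)
```

Here `a.com` is visible in three of five runs but never twice in a row, so its consistency looks moderate while its retention is a single run, which is exactly the pattern the separate metrics are meant to expose.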

Together, these measurements serve as practical reference points and provide a baseline for interpreting brand visibility in LLMs.

The Citation vs. Mention Divide

Not all citations carry the same weight.

Being cited as a source signals that your content aligns with the query. But a brand mention in the answer goes further—it shows the model is actively bringing your brand into the conversation. That distinction creates stronger recall and a higher likelihood of recurring visibility.

Across a balanced mix of informational and commercial queries, brands earning visibility were 3x more likely to be cited without a mention than to be both cited and mentioned.

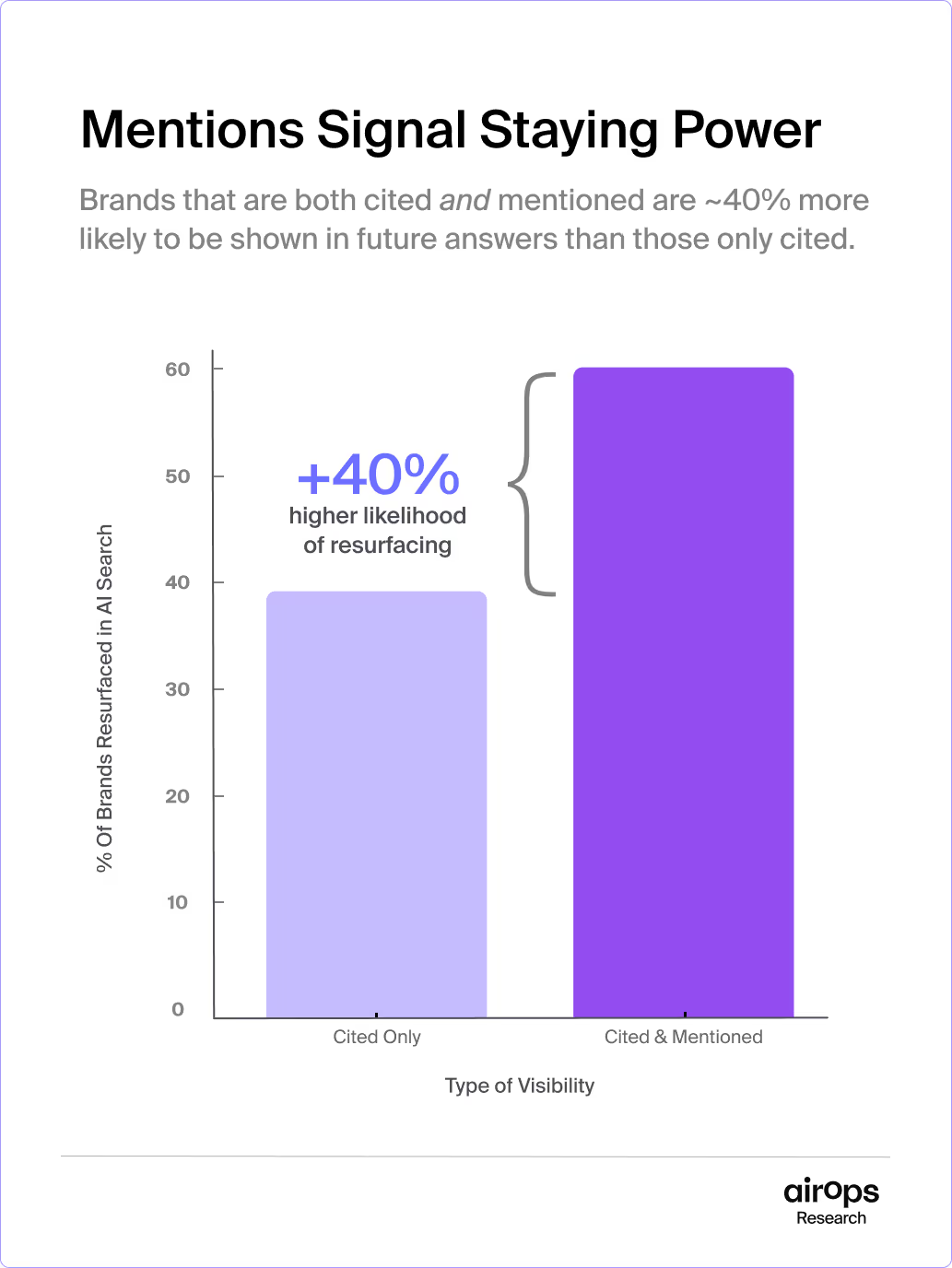

On average, 28% of LLM responses included brands that were both mentioned in the answer and cited as a source. In our analysis, this “mentioned and cited” combination increased the likelihood of resurfacing across multiple runs by 40% compared with cited-only brands.

Brands that were cited-only proved far more vulnerable to citation drift—appearing in one run, disappearing in the next, and occasionally resurfacing later.
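Assuming the 40% figure is a relative lift (the mentioned-and-cited resurfacing rate divided by the cited-only rate, minus one), the comparison could be computed roughly as below. This is a minimal Python sketch, not the study’s method: the observation record format is hypothetical, a brand is placed in the “mentioned and cited” group if it was ever mentioned in a run where it was cited, and “resurfacing in multiple runs” is read as appearing in at least two runs.

```python
from collections import defaultdict

# Hypothetical record: (brand, run_index, mentioned_in_answer, cited_as_source)
Observation = tuple[str, int, bool, bool]


def resurfacing_rates(observations: list[Observation], min_runs: int = 2) -> dict[str, float]:
    """Share of cited brands in each group that appear in at least `min_runs` runs."""
    runs_seen: dict[str, set[int]] = defaultdict(set)
    ever_mentioned: dict[str, bool] = defaultdict(bool)
    for brand, run, mentioned, cited in observations:
        if not cited:
            continue  # only brands cited as a source are compared
        runs_seen[brand].add(run)
        ever_mentioned[brand] |= mentioned

    groups: dict[str, list[bool]] = {"mentioned_and_cited": [], "cited_only": []}
    for brand, seen in runs_seen.items():
        group = "mentioned_and_cited" if ever_mentioned[brand] else "cited_only"
        groups[group].append(len(seen) >= min_runs)
    return {g: sum(hits) / len(hits) if hits else 0.0 for g, hits in groups.items()}


def relative_lift(treated: float, baseline: float) -> float:
    """Relative change; 0.4 would mean 40% more likely than the baseline."""
    if baseline == 0:
        raise ValueError("baseline rate must be non-zero")
    return treated / baseline - 1


# Toy data for illustration only (not results from the study).
obs = [
    ("A", 0, True, True), ("A", 1, True, True),
    ("B", 0, True, True), ("B", 3, True, True),
    ("C", 1, True, True), ("C", 2, True, True), ("C", 4, True, True),
    ("D", 2, True, True),
    ("E", 0, False, True), ("E", 2, False, True),
    ("F", 1, False, True),
]
rates = resurfacing_rates(obs)
print(rates)  # {'mentioned_and_cited': 0.75, 'cited_only': 0.5}
print(relative_lift(rates["mentioned_and_cited"], rates["cited_only"]))  # 0.5
```

With this toy data, three of four mentioned-and-cited brands resurface versus one of two cited-only brands, a relative lift of 50%. Reporting the lift alongside both raw rates keeps it clear whether a difference is relative or in percentage points.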

This distinction highlights why mentions matter, but it also raises the bigger question: once visibility is earned—whether through a mention, a citation, or both—how long should a brand expect to retain that visibility?

Citation Drift Is the New Normal

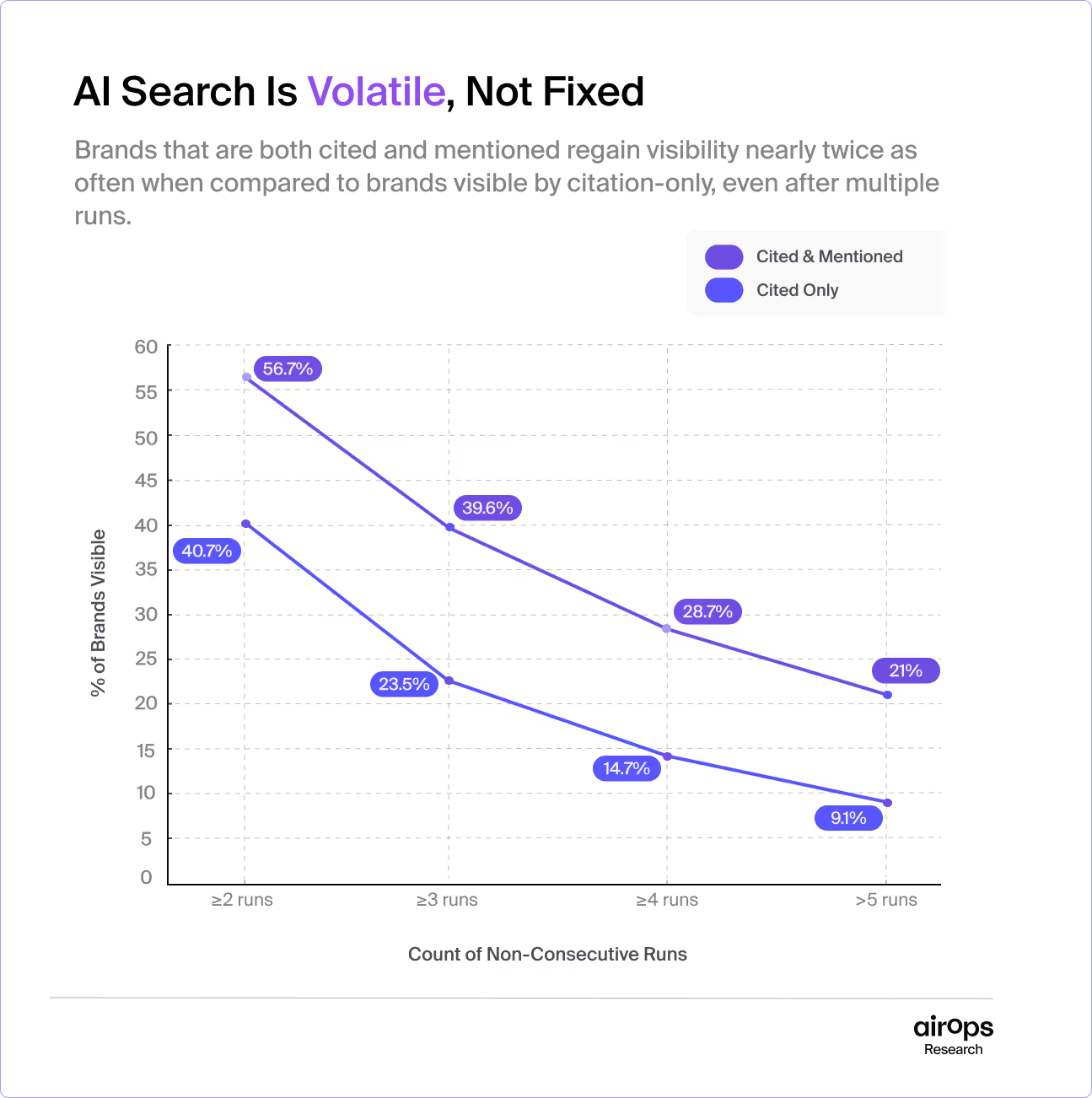

Once visibility is earned, the question becomes: how durable is it? What might first appear as inconsistent visibility is better understood as citation drift—the continual in-and-out rotation of sources as models rebalance for diversity, freshness, and intent coverage.

During our analysis, we found that around 30% of brands sustained visibility from one run to the very next—making consecutive persistence the exception, not the rule.

When we widened the scope to non-consecutive runs, we found that ~57% of brands that were both cited and mentioned resurfaced at least twice, while cited-only brands resurfaced less often.
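The two figures above answer different questions, and a small Python sketch makes the distinction explicit. It assumes each run is a set of visible brands, reads “sustained visibility from one run to the very next” as the share of brands in run i that are still present in run i + 1 (averaged over adjacent pairs), and reads “resurfaced at least twice” as appearing in at least two runs, consecutive or not. Both function names are hypothetical.

```python
from collections import Counter


def consecutive_persistence(runs: list[set[str]]) -> float:
    """Average share of brands in run i that are still visible in run i + 1."""
    rates = [len(cur & nxt) / len(cur) for cur, nxt in zip(runs, runs[1:]) if cur]
    return sum(rates) / len(rates) if rates else 0.0


def resurfaced_at_least(runs: list[set[str]], k: int = 2) -> set[str]:
    """Brands that appear in at least k runs, consecutive or not."""
    counts = Counter(brand for run in runs for brand in run)
    return {brand for brand, c in counts.items() if c >= k}


# Toy data for illustration only.
runs = [{"A", "B", "C", "D"}, {"A", "E"}, {"B", "E"}]
print(consecutive_persistence(runs))      # 0.375
print(sorted(resurfaced_at_least(runs)))  # ['A', 'B', 'E']
```

Brand B drops out of the second run yet still counts as resurfacing, which is why non-consecutive rates can sit well above run-to-run persistence.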

For teams, this means volatility is predictable: the challenge isn’t eliminating drift, but learning how to measure and work within it—so you can take strategic, prioritized action.

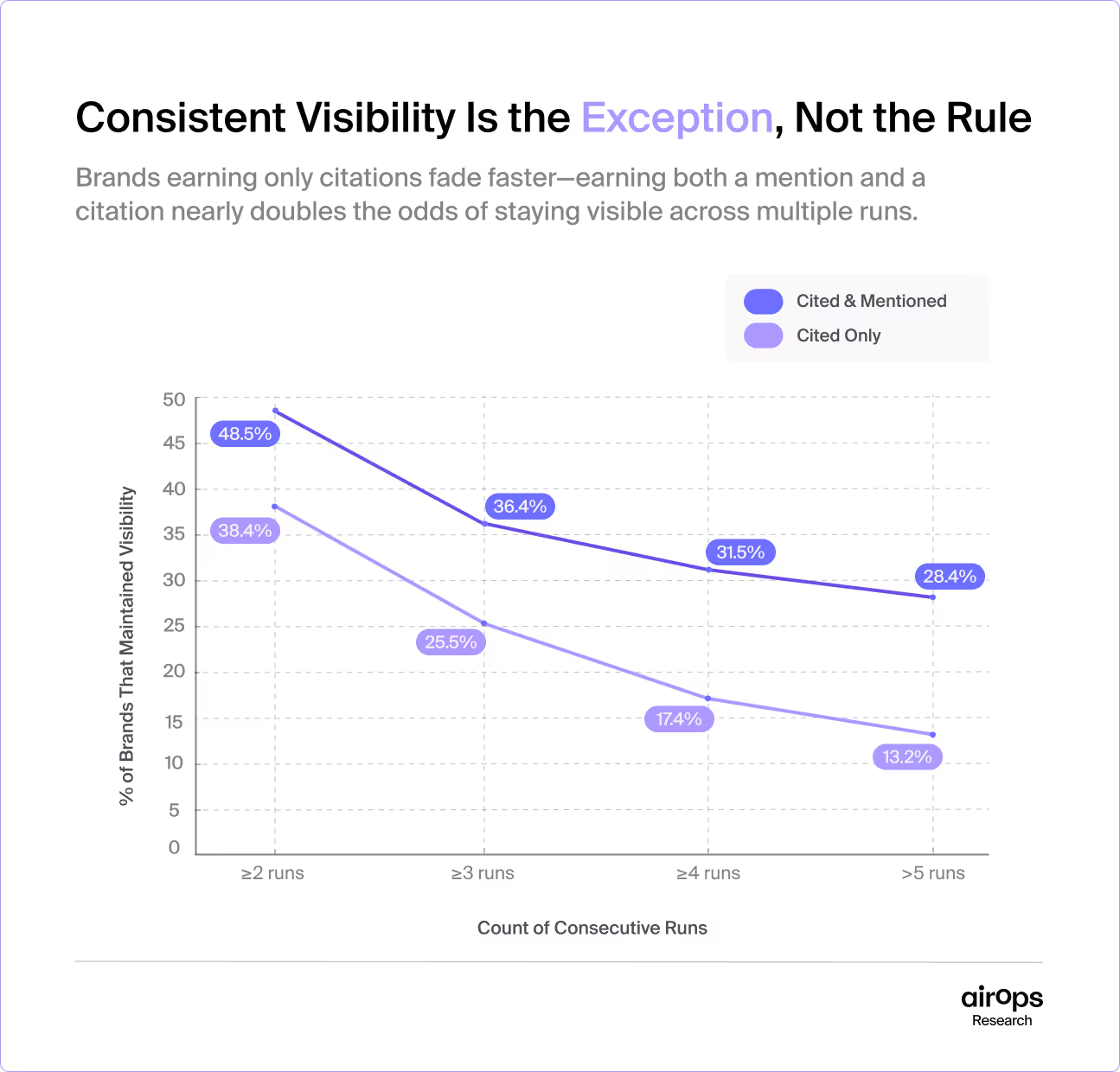

Consistent Visibility Is the Exception

Throughout our analysis, we found that some brands managed to hold steady in AI search results, but they’re the exception, not the rule. Across more than 45,000 citations, only 1 in 5 brands maintained visibility from the first run to the fifth.
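One reading of “maintained visibility from the first run to the fifth” is that a brand appears in every one of the first five runs. Under that assumption, the measure reduces to a set intersection, sketched here in Python with a hypothetical function name and toy data.

```python
def full_window_retention(runs: list[set[str]], n: int = 5) -> float:
    """Share of brands in the first run that stay visible in every run up to run n."""
    window = runs[:n]
    if len(window) < n or not window[0]:
        raise ValueError(f"need {n} runs with a non-empty first run")
    kept = set.intersection(*window)  # brands present in all n runs
    return len(kept) / len(window[0])


# Toy data for illustration only: only "A" survives all five runs.
runs = [{"A", "B", "C", "D"}, {"A", "B", "E"}, {"A", "C"}, {"A", "B"}, {"A", "F"}]
print(full_window_retention(runs))  # 0.25
```

A stricter intersection like this is sensitive to a single missed run, so it pairs naturally with the more forgiving resurfacing measures.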

The consistently cited pages shared common traits: structured elements like rich schema, sequential headings, scannable formatting, and concise language—all signals that help both users and models interpret content more effectively.

This observation aligns with our earlier research on content structure, which found that pages with well-organized headings were 2.8× more likely to earn citations in AI search results.

The First-Occurrence Effect

During our research, we found that a brand’s first appearance provides a reliable baseline for how long its visibility will last. Fluctuation is expected—many brands surfaced, dropped out, and then resurfaced again across multiple runs.

Most brands that disappeared from the answer resurfaced within two runs, showing that these drops are usually short-lived.

For teams, this is an important caution: a single drop is rarely a reason to act. Anchoring measurement to the first appearance sets realistic expectations, prevents overreaction to normal drift, and highlights where effort matters most.

Before making major changes, track performance over a measurement window that spans several runs. This buffer helps distinguish normal drift from genuine underperformance, turning volatility into a measurable signal rather than noise.
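A measurement window can be expressed as a simple rule. The Python sketch below labels a brand’s current status from its per-run visibility history. The default two-run window is a hypothetical setting, loosely informed by the observation that most drops resolved within two runs, and the function name and labels are illustrative rather than an AirOps feature.

```python
def drop_status(present: list[bool], window: int = 2) -> str:
    """Classify a brand's latest state from its per-run visibility (oldest first).

    `window` is a hypothetical threshold: how many consecutive absent runs
    count as normal drift before the drop is treated as underperformance.
    """
    if True not in present:
        return "never_seen"
    last_seen = len(present) - 1 - present[::-1].index(True)
    runs_absent = len(present) - 1 - last_seen
    if runs_absent == 0:
        return "visible"
    if runs_absent <= window:
        return "drifting"  # inside the window: keep measuring, don't rewrite yet
    return "underperforming"  # absent longer than the window: review the page


history = [True, True, False, False, False]
print(drop_status(history))            # underperforming
print(drop_status(history, window=3))  # drifting
```

Widening the window trades reaction speed for fewer false alarms; teams could tune it per query set once they have their own resurfacing data.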

Turning Volatility Into an Advantage

AI search is volatile by design, but maintaining visibility across answer engines doesn’t have to mean guesswork. The key is to build measurement practices that account for fluctuation, identify patterns, and separate real signals from expected noise.

Here’s how teams can use volatility to their advantage:

- Anchor on first occurrences to establish a baseline for visibility.

- Track both mentions and citations to capture authority signals the model is using.

- Use structured measurement windows to avoid overreacting to short-term fluctuation.

- Refresh content regularly and monitor early traction to understand which updates sustain visibility.

Fluctuation is inevitable. The teams that will win AI search are those that turn volatility into actionable insight.

Ready to future-proof your content? Book a strategy session to learn how AirOps helps brands measure and take prioritized action in AI search.