How AI will transform Content Operations

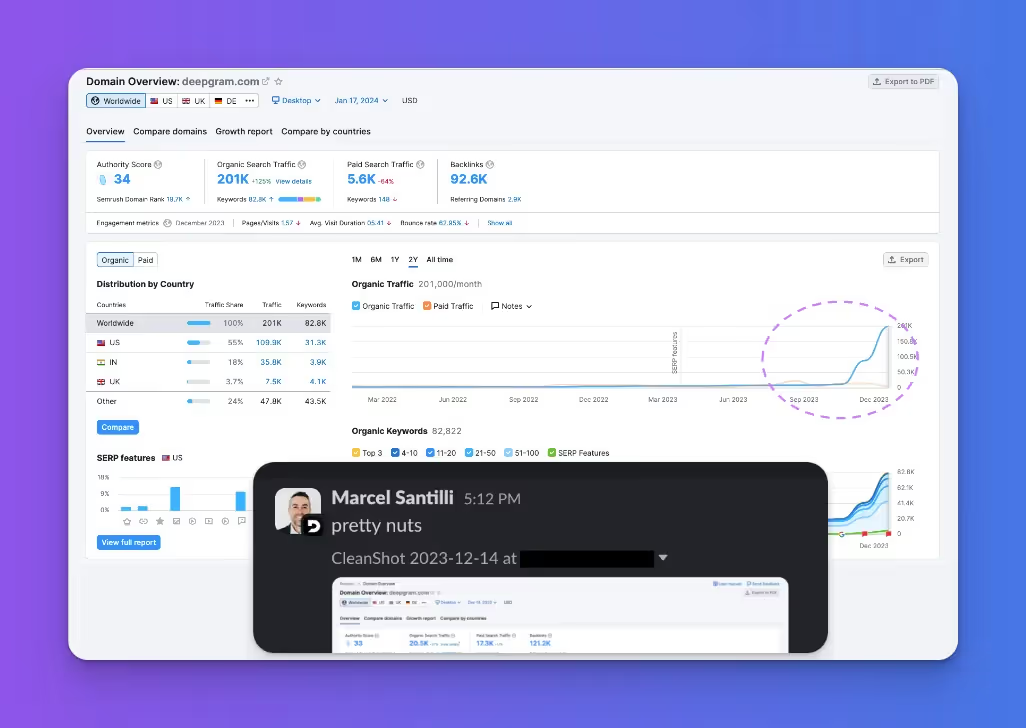

We’ve been showcasing LLM outputs to content teams across B2B SaaS, e-commerce and broader consumer businesses over the past 6 months, and the feedback is clear: LLMs like GPT-4o and Claude Opus now have the potential to transform Content Operations. The implications for organic growth strategies are significant. However, as Google emphasizes quality and originality, applying LLMs properly becomes increasingly crucial. Some companies, like Deepgram, are already seeing fantastic results, with organic traffic increases of as much as 36x.

Whether it’s for content research, content creation, personalization or other content operations workflows, the correct combination of first-party data, LLMs (and prompts) and human oversight can accelerate existing workflows and unlock entirely new ones.

Today we’re going to explore some of the dominant applications of LLMs in Content Operations, some of the core techniques used in applying LLMs and some of the organizational implications of this new way of working.

Some of the core use cases

Content Planning and Research

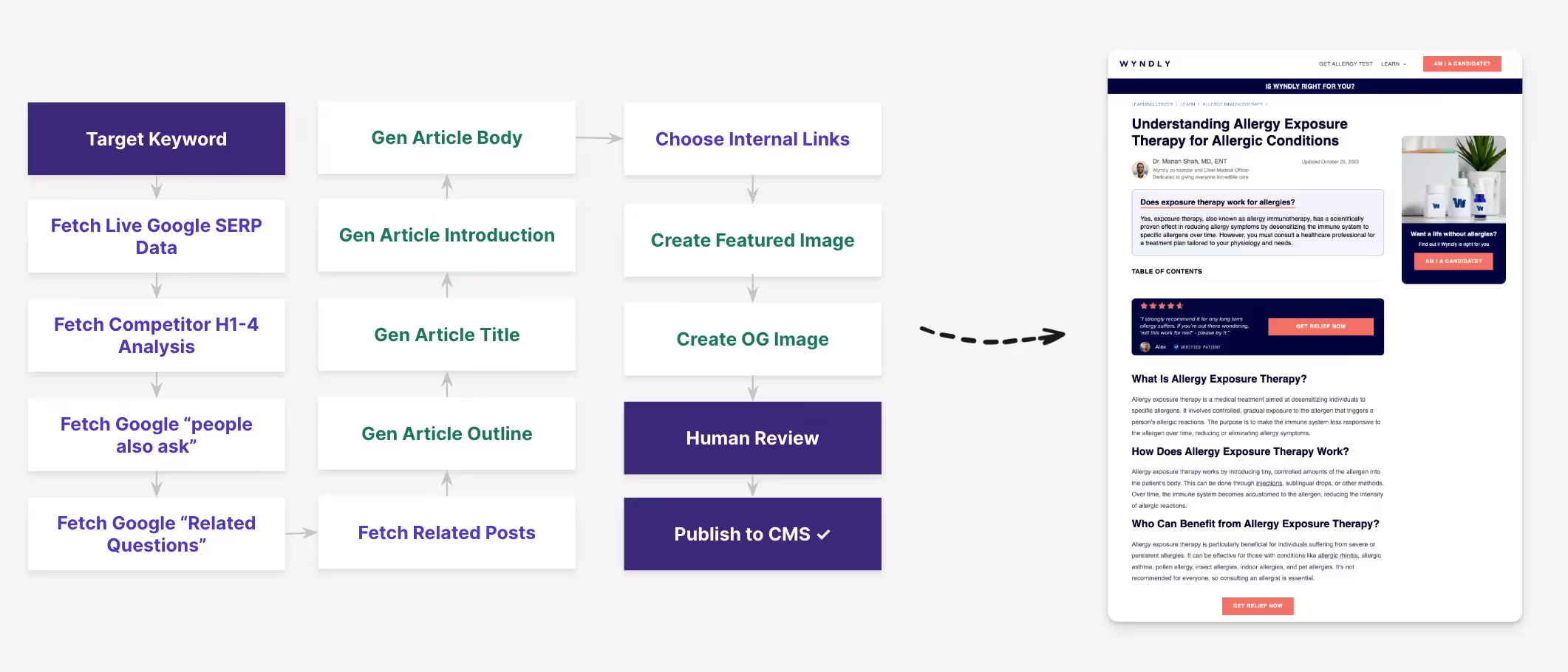

One of the most promising applications of LLMs in content operations is in the area of content planning and research. By mixing internal data, live data and the vast knowledge base inside of these models, content teams can quickly generate ideas, outlines, and even first drafts of articles, blog posts, and other content pieces.

To create something original and relevant, it's important to ground these outputs in relevant first-party data, such as brand guidelines, tone and voice samples, and existing content assets. This ensures that the generated content aligns with the brand's unique identity and messaging.

Integrating external datasets, such as keyword research from tools like Semrush, into LLM prompts ensures that the topics and angles chosen are not only relevant to the brand but also have the potential to perform well in search engines and resonate with the target audience.
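To make this concrete, here is a minimal sketch of how first-party data and keyword research might be combined into a single planning prompt. The `Keyword` fields, the ranking rule, and the `build_planning_prompt` helper are all assumptions for illustration; they do not reflect a Semrush export format or any AirOps API.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    term: str
    monthly_volume: int  # hypothetical field from a keyword research export
    difficulty: int      # hypothetical 0-100 difficulty score


def build_planning_prompt(brand_guidelines: str, voice_samples: list[str],
                          keywords: list[Keyword], top_n: int = 3) -> str:
    """Assemble a planning prompt grounded in first-party and external data."""
    # Assumed, simplistic ranking: highest volume first, lowest difficulty on ties.
    ranked = sorted(keywords, key=lambda k: (-k.monthly_volume, k.difficulty))[:top_n]
    keyword_lines = "\n".join(
        f"- {k.term} (volume: {k.monthly_volume}, difficulty: {k.difficulty})"
        for k in ranked
    )
    samples = "\n\n".join(voice_samples)
    return (
        "You are planning content for our brand.\n\n"
        f"Brand guidelines:\n{brand_guidelines}\n\n"
        f"Voice samples:\n{samples}\n\n"
        f"Target keywords:\n{keyword_lines}\n\n"
        "Propose five article ideas, each with a working title and a short outline."
    )
```

The resulting string can be sent to whichever model you are testing. Keeping the prompt assembly in one function makes it easy to swap in different guidelines or keyword sets.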

Content Creation, Expansion and Repurposing

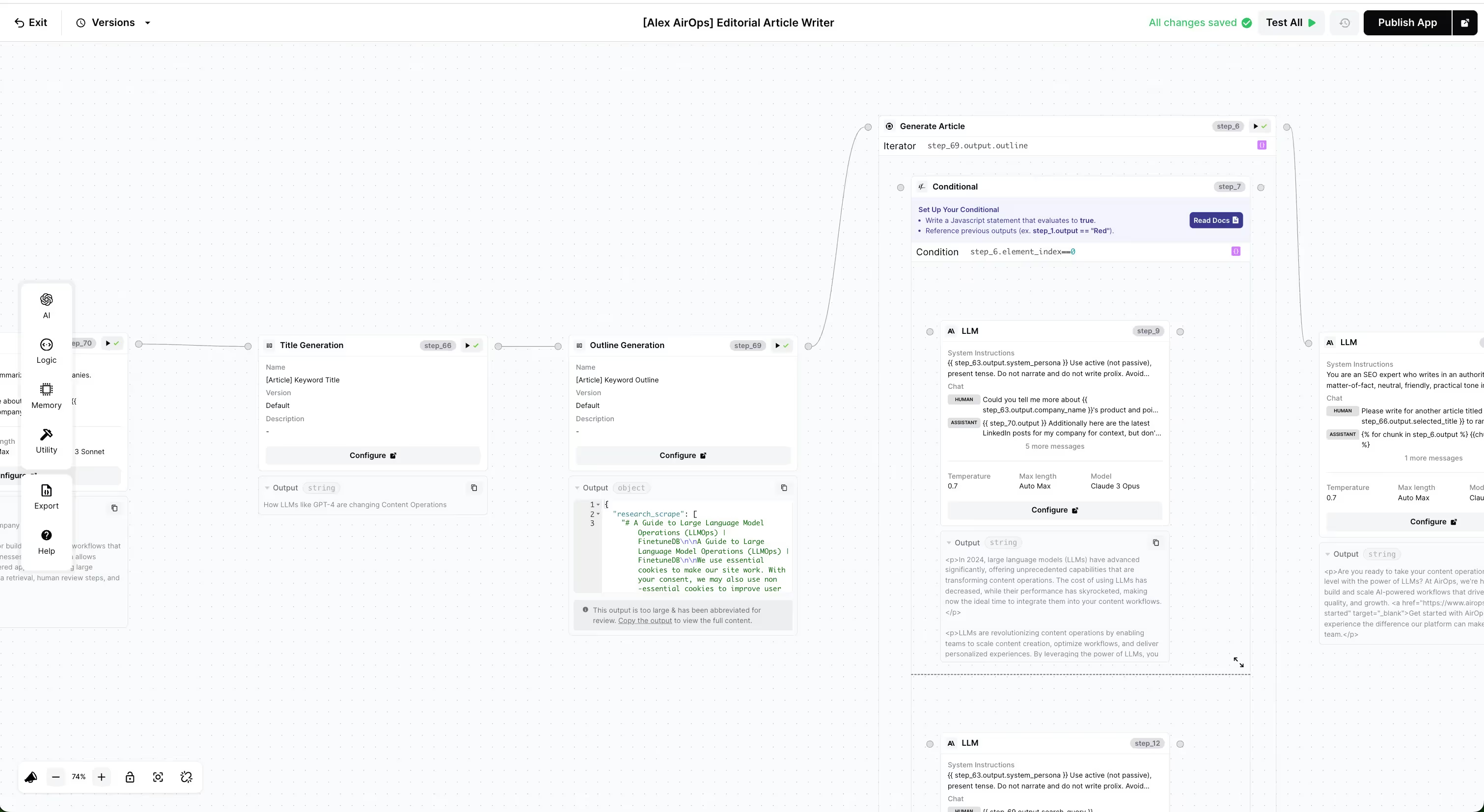

Once the planning and research phase is complete, LLMs can be used to generate high-quality content at scale. By providing the models with a well-crafted prompt that includes key information such as the target audience, desired tone and style, specific talking points, and deep research, content teams can quickly generate drafts that human editors can review and refine mid-workflow or at the end before go-live. AirOps makes it easy to blend human review with LLMs as part of our workflow design tools so you can decide where and when you want human oversight.

It's important to remember that the quality of the outputs is directly correlated with the quality of the inputs. Generic prompts will result in generic, ineffective content. To ensure that the generated content is relevant, original, and engaging, it's essential to apply your unique strategy, POV, and content assets to the prompts.

LLMs can also be used to expand or repurpose existing content, such as turning a blog post into a video script or a social media post into a full-fledged article. By providing the models with the original content as input, along with clear instructions on how to expand and adapt it, content teams can quickly create multiple versions of the same piece, tailored for different channels and audiences. This gives existing content teams incredible leverage: they can create a single high-quality asset and repurpose it into other formats for other channels.

Content Localization

For brands with a global or local presence, content localization is a critical but often time-consuming task. LLMs can help streamline this process by automatically translating content into multiple languages, incorporating local information and data points, and adapting it to local cultural nuances and preferences. Using local APIs and internal data, LLMs can subtly adapt content to speak to a local audience, which improves conversion, ranking and authenticity.

Internal Linking and Technical SEO Updates

LLMs can also be used to improve the technical SEO aspects of a website, such as internal linking and meta descriptions. By analyzing the content of a website and identifying opportunities for internal links, the models can suggest relevant anchor text and destination pages to help improve the site's structure and navigation. AirOps makes this easy with AirOps Grid, our bulk running experience that lets you run LLM workflows at scale across your existing CMS collections.

Similarly, LLMs can be used to generate meta descriptions and other on-page SEO elements, ensuring that they are optimized for search engines while still being engaging and relevant to the content itself.
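Before asking a model to suggest anchor text, it can help to shortlist candidate destination pages cheaply. The sketch below does this with a plain keyword-overlap (Jaccard) score rather than an LLM. The stopword list, function names, and the `pages` mapping are assumptions for illustration, not how AirOps Grid works.

```python
import re

# A deliberately tiny stopword list; a real one would be much longer.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "for", "with", "on", "is", "how"}


def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of non-stopword tokens."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def shortlist_link_targets(source_text: str, pages: dict[str, str],
                           top_n: int = 3) -> list[tuple[str, float]]:
    """Rank candidate destination pages by Jaccard overlap with the source text.

    `pages` maps a URL path to that page's title or summary.
    """
    source_tokens = tokenize(source_text)
    scored = []
    for url, summary in pages.items():
        page_tokens = tokenize(summary)
        union = source_tokens | page_tokens
        if not union:
            continue
        score = len(source_tokens & page_tokens) / len(union)
        if score > 0:  # drop pages with no shared vocabulary
            scored.append((url, score))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]
```

The shortlisted pages and the source paragraph can then be passed to an LLM with an instruction to propose anchor text, which keeps the prompt small and the suggestions on-topic.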

Content Testing

LLMs can be used to facilitate content testing and optimization. By generating multiple versions of headlines, descriptions, and calls-to-action, content teams can quickly set up A/B tests to determine which variations resonate best with their audience. The models can also be used to analyze user engagement data and suggest content optimizations based on the insights gleaned.
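Once two variants have collected traffic, you still need a way to decide whether the difference is real. One common option is a two-proportion z-test on click-through rates, sketched below with only the standard library. The function name and the choice of this particular test are assumptions; your analytics tool may use a different method.

```python
import math


def two_proportion_z_test(clicks_a: int, views_a: int,
                          clicks_b: int, views_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in click-through rate."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A positive `z` means variant B had the higher click-through rate. A small p-value (0.05 is a common, if arbitrary, threshold) suggests the gap is unlikely to be noise.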

As with all applications of LLMs in content operations, the key to success lies in the quality of the inputs and the strategic application of the technology. By grounding the models in relevant first-party data, integrating external datasets, and applying unique brand strategies and POVs, content teams can harness the power of LLMs to create compelling, original, and effective content at scale.

Workflow Design Best Practices

Workflow design is everything. Here are some key best practices to keep in mind:

- Retrieval is King: Retrieval augmented generation (RAG) is a game-changer. By injecting internal content, data, social proof, and other unique content into LLM prompts in real-time, you can dramatically improve the originality, tone, voice, and accuracy of your outputs.

- Human Review: Figuring out where and when humans should review outputs in a generative flow is critical. Typically, reviewing titles, "pitches", outlines, and intro paragraphs (before full article generation) can make a huge difference in the quality of your final output. Over time, as you become more comfortable with your workflow, you can leave more of the decision-making to the model.

- Prompt-Chaining: Break your prompts down into smaller, incremental instruction sets for LLMs to dramatically improve their focus. By passing in previous outputs into subsequent instructions, you can help the LLM remain consistent with previous statements and avoid repetition.

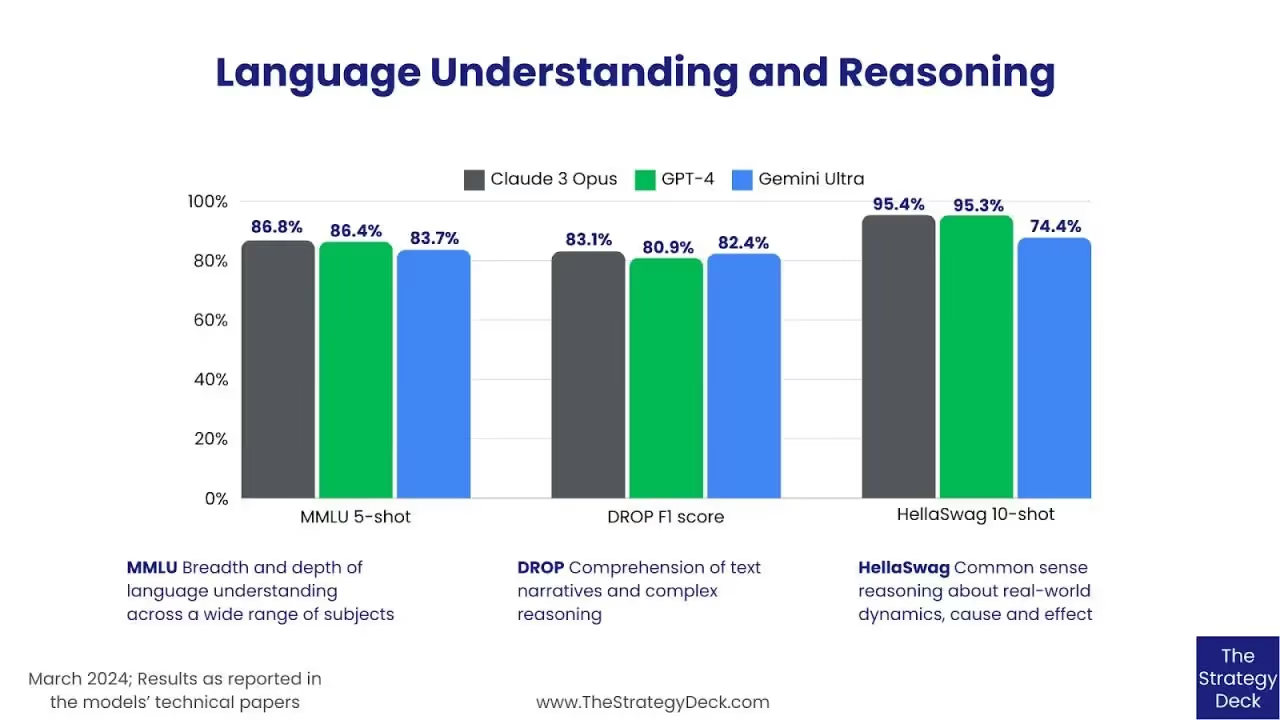

- Mix It Up: Experimentation with different models is key to getting great outputs. Our current model recommendations for content creation are Claude Opus, GPT-4o, Gemini 1.5, and Llama 3, but by the time you're reading this, there will probably be even more options to choose from. For summarization tasks, Claude Sonnet is a great go-to.

- Persona Definition: Take the time to define your LLM persona in detail to nail that tone and voice. Construct a backstory, perspective, and set of base facts for the LLM to embody as it creates content. This can take some testing to avoid the LLM over-rotating into its system prompt.

- Iterate, Iterate, Iterate: There's always room for improvement when it comes to LLM workflows, instructions, and input data. Having a low-code platform like AirOps can be a game-changer, allowing you to make changes to your workflows in minutes.
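Several of these practices fit together in a single flow. The sketch below combines retrieval, a persona, prompt-chaining, and a human review checkpoint. It is a toy under stated assumptions: `llm` is a hypothetical callable standing in for whichever model client you use, `approve` stands in for a human reviewer, and retrieval is naive word overlap rather than the embedding search a production RAG setup would typically use.

```python
from typing import Callable, Optional

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns model text


def retrieve(query: str, documents: list[str], top_n: int = 2) -> list[str]:
    """Naive retrieval: rank documents by how many query words they share."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked if score > 0][:top_n]


def run_workflow(topic: str, documents: list[str], persona: str, llm: LLM,
                 approve: Callable[[str], bool]) -> Optional[str]:
    """Chain outline -> intro -> draft, with a human checkpoint after the outline."""
    context = "\n".join(retrieve(topic, documents))

    # Step 1: a small, focused instruction grounded in retrieved context.
    outline = llm(f"{persona}\n\nContext:\n{context}\n\nWrite an outline about: {topic}")

    # Human review before full generation; stop early if the outline is rejected.
    if not approve(outline):
        return None

    # Step 2: pass the previous output forward so the model stays consistent.
    intro = llm(f"{persona}\n\nOutline:\n{outline}\n\nWrite the introduction only.")

    # Step 3: the final step sees both earlier outputs.
    return llm(
        f"{persona}\n\nContext:\n{context}\n\nOutline:\n{outline}\n\n"
        f"Introduction:\n{intro}\n\n"
        "Write the remaining sections without repeating the introduction."
    )
```

Because `llm` and `approve` are passed in, you can swap models to compare outputs or replace the approval step with a real review queue without touching the chain itself.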

By keeping these best practices in mind and being willing to experiment and iterate, you can create LLM-powered content workflows that are efficient, effective, and downright impressive.

Organization and Process Design

We believe that AI-first growth is going to be a major trend. Teams that embrace it early will have a huge unfair advantage. Concretely, this looks like:

- Encouraging experimentation with LLMs, RAG and a range of techniques

- Using a tool like AirOps to increase the number of people that can build with LLMs internally

- Staying organized with your prompts, learnings and accumulated best practices

- Continuing to improve as the modern AI stack improves and more technologies emerge

Are you ready to take your content operations to the next level with the power of LLMs? At AirOps, we're here to help you build and scale AI-powered workflows that drive efficiency, quality, and growth. Get started with AirOps today and experience the difference our platform can make for your content team.
