AI Visibility Metrics That Matter: What to Track and Why in 2026

- Citation share and competitive share of voice show whether AI systems treat your brand as a trusted source

- Mention rate and sentiment reveal how often and how favorably AI tools describe you

- Prompt packs turn raw visibility data into organized, repeatable testing programs

- Branded search trends and assisted conversions measure impact better than raw AI referral clicks

- Weekly audits convert visibility gaps into structured content updates that compound over time

AI visibility tools reveal how often answer engines like ChatGPT, Perplexity, and Google AI Overviews mention your brand. Those metrics matter because many buyers now turn to AI tools first, often before they open a traditional search results page.

AI visibility data answers three practical questions: where you appear, where competitors appear instead, and how AI systems describe your company. This article explains which metrics deserve attention, how to interpret them responsibly, and how to turn visibility insights into concrete content actions.

Why AI visibility metrics matter now

Traditional SEO metrics track rankings, clicks, and impressions. They don't show whether AI systems cite your content when people ask high-intent questions.

AI visibility metrics close that gap. They measure a new form of discovery that analytics platforms cannot see on their own. If an AI answer engine recommends your competitor five times more often than it recommends you, rankings alone will not tell that story.

Buyer behavior has already changed:

- People ask AI tools for product comparisons, vendor shortlists, and recommendations

- A single AI answer often replaces multiple searches

- A citation in an AI response can shape consideration more than a top organic result

These shifts create a new layer of brand presence that marketing teams need to measure and manage.

Three trends explain why this matters:

- Buyers skip result pages and move directly to AI answers

- Mentions in AI responses can influence decisions as much as traditional rankings do

- Competitors can gain ground unnoticed when teams track SERPs but ignore AI visibility

How mature is AI visibility measurement today?

AI visibility tracking remains an emerging discipline. No industry-wide benchmarks exist yet, and tools use different methods to collect and present data.

As Aleyda Solis explained in a recent AirOps webinar:

“SEOs must rethink how they measure success — AI overviews change what visibility looks like.” — Aleyda Solis

Her point captures the core challenge. Traditional SEO dashboards no longer tell the whole story.

AI outputs also vary from run to run and over time, which makes tracking less precise than established SEO metrics.

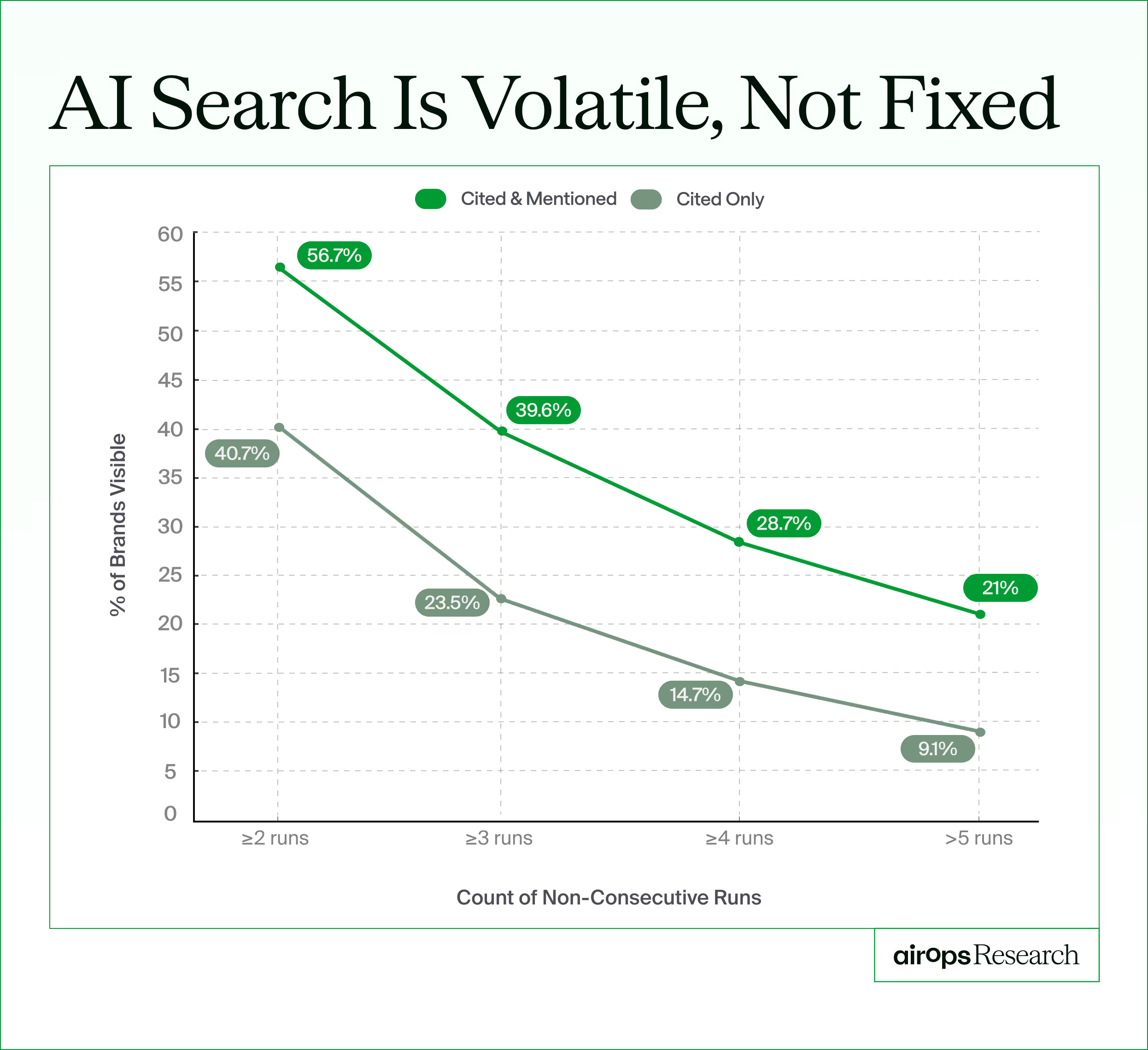

Research from AirOps highlights this volatility. Only 30% of brands stay visible from one answer to the next in AI search results, and just 20% remain visible across five consecutive runs. That instability makes consistent tracking far more valuable than occasional spot checks.
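You can measure the same kind of instability in your own data by re-running a prompt several times and checking whether your brand survives from one run to the next. The sketch below is a minimal illustration, not the methodology behind the AirOps figures. It assumes each run is stored as the set of brand names that appeared in the answer. The brand names, the sample data and both function names are hypothetical.

```python
def consecutive_persistence(runs: list[set[str]], brand: str) -> float:
    """Of the consecutive run pairs where `brand` appears in the first run,
    return the fraction where it also appears in the second run."""
    appeared = 0
    stayed = 0
    for previous, current in zip(runs, runs[1:]):
        if brand in previous:
            appeared += 1
            if brand in current:
                stayed += 1
    return stayed / appeared if appeared else 0.0


def visible_in_every_run(runs: list[set[str]], brand: str) -> bool:
    """True only if `brand` appears in every run of the prompt."""
    return bool(runs) and all(brand in run for run in runs)


# Hypothetical data: five runs of one prompt, each recorded as the set of brands named.
runs = [
    {"Acme", "Globex"},
    {"Globex"},
    {"Acme", "Globex"},
    {"Acme"},
    {"Acme", "Initech"},
]

print(round(consecutive_persistence(runs, "Acme"), 2))  # 0.67
print(visible_in_every_run(runs, "Acme"))              # False
```

In this example, Acme stays visible in two of the three transitions that start with it in the answer. It is absent from run 2, so it does not count as visible across all five runs.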

Even with these limitations, the data still delivers real value. Directional insights beat guesswork. Patterns over time reveal where visibility improves and where it slips. Teams that consistently track AI presence make better decisions than those that rely on intuition alone.

Five AI visibility metrics worth tracking

Most dashboards offer dozens of numbers. In practice, five indicators provide the clearest signals.

1. Citation share

Citation share measures how often AI responses cite your content compared to competitors for a specific set of prompts.

If you test 100 prompts and AI systems cite you in 18 responses while citing a competitor in 47, your citation share is 18% against the competitor's 47%, and the competitor is cited roughly 2.6 times as often. Citation share answers a direct question: do AI tools recognize your brand as a trusted source?
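Once results are recorded, citation share is simple arithmetic. This sketch assumes each tested prompt is stored with the set of brands its AI response cited. The record layout and the brand names "You" and "Rival" are hypothetical. The sketch divides by the number of prompts tested, so 18 of 100 gives 18%. That is one reasonable definition; some tools divide by all citations instead.

```python
def citation_share(results: list[dict], brand: str) -> float:
    """Fraction of tested prompts whose AI response cites `brand` as a source."""
    if not results:
        return 0.0
    cited = sum(1 for result in results if brand in result["cited_brands"])
    return cited / len(results)


# Hypothetical results for 100 prompts: "You" is cited in 18 responses, "Rival" in 47.
results = [
    {
        "prompt": f"prompt {i}",
        "cited_brands": ({"You"} if i < 18 else set()) | ({"Rival"} if i < 47 else set()),
    }
    for i in range(100)
]

yours = citation_share(results, "You")
theirs = citation_share(results, "Rival")
print(f"{yours:.0%} vs {theirs:.0%}, ratio {theirs / yours:.1f}")  # 18% vs 47%, ratio 2.6
```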

Low citation share usually points to shallow content, outdated pages, or unclear answers to common questions. It signals where refreshes and structural improvements will make the biggest difference.

2. Competitive share of voice

Share of voice tracks how often your brand appears versus competitors across relevant AI queries.

You might dominate technical topics while a competitor controls introductory guides. Tracking these patterns helps you decide where to defend existing ground and where to expand coverage.
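Splitting share of voice by topic cluster makes patterns like these visible. This is a minimal sketch under a few assumptions. Each answer is logged as a topic label plus the set of brands it mentions. Share is computed only among the brands you choose to track, so untracked brands such as "Other" below are ignored. All names and data are hypothetical.

```python
from collections import Counter, defaultdict


def share_of_voice_by_topic(
    answers: list[tuple[str, set[str]]], tracked: set[str]
) -> dict[str, dict[str, float]]:
    """For each topic, each tracked brand's share of all tracked-brand appearances."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for topic, brands in answers:
        counts[topic].update(brands & tracked)  # ignore brands you are not tracking
    shares = {}
    for topic, counter in counts.items():
        total = sum(counter.values())
        shares[topic] = {
            brand: (counter[brand] / total if total else 0.0) for brand in sorted(tracked)
        }
    return shares


topic_answers = [
    ("technical", {"You", "Rival"}),
    ("technical", {"You"}),
    ("intro", {"Rival"}),
    ("intro", {"Rival", "Other"}),
    ("intro", {"You", "Rival"}),
]
print(share_of_voice_by_topic(topic_answers, {"You", "Rival"}))
```

Here "You" holds two thirds of the technical cluster, while "Rival" holds three quarters of the introductory one. That is the defend-versus-expand split this section describes.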

3. Mention rate

Mention rate tracks how often your brand name appears in AI-generated answers.

Two outcomes matter here:

- A citation with a link, which can drive measurable traffic

- A mention without a link, which still shapes awareness and perception

Many purchase decisions begin with an unlinked recommendation. Because most AI mentions originate from third-party sources, mention rate often reveals how well your reputation travels beyond your own content.

4. Sentiment score

Sentiment score captures the tone AI models use when they describe your brand.

An AI system might mention you frequently but frame you poorly. It might highlight missing features, outdated information, or unfavorable comparisons. Tracking sentiment helps you catch those narratives early and correct the underlying content.
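A sentiment score is only as good as the labels behind it. This sketch assumes each AI answer that mentions your brand has been tagged positive, neutral or negative by a reviewer or a classifier. Each tag also carries a short theme note, such as "missing features". The three-point scale and the function name are hypothetical. The function returns an average tone and a tally of the themes behind negative mentions, so you can see which narrative to correct first.

```python
from collections import Counter

# Hypothetical three-point scale; use whatever labels your reviewers apply.
TONE_VALUES = {"positive": 1, "neutral": 0, "negative": -1}


def summarize_sentiment(labeled: list[tuple[str, str]]) -> tuple[float, Counter]:
    """Average tone (-1 to 1) and a tally of themes behind negative mentions.

    `labeled` holds (tone, theme) pairs, one per AI answer that mentions the brand.
    """
    if not labeled:
        raise ValueError("no labeled mentions to score")
    score = sum(TONE_VALUES[tone] for tone, _ in labeled) / len(labeled)
    negative_themes = Counter(theme for tone, theme in labeled if tone == "negative")
    return score, negative_themes


score, themes = summarize_sentiment([
    ("positive", "easy setup"),
    ("negative", "missing features"),
    ("neutral", ""),
    ("negative", "outdated pricing"),
    ("negative", "missing features"),
])
print(score)                  # -0.4
print(themes.most_common(1))  # [('missing features', 2)]
```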

5. Drift and volatility

Drift tracks how AI outputs change over time. As models retrain on new data, their descriptions of brands evolve.

A sudden drop in citations usually signals one of three issues:

- Competitors published stronger content

- Your pages grew outdated

- Models learned new information that changed how they answer

Regular monitoring helps you respond before negative shifts become the default story.
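One simple way to make "a sudden drop" concrete is to compare the latest week against a trailing average. This is a minimal sketch. The four-week window and the 30% threshold are arbitrary assumptions, not recommended values, and the function names are hypothetical. A flag here should prompt a manual check against the three causes listed above, not an automatic conclusion.

```python
from statistics import mean
from typing import Optional


def relative_drop(weekly_rates: list[float], window: int = 4) -> Optional[float]:
    """Drop of the latest week versus the mean of the preceding `window` weeks.

    Returns None when there is not enough history or the baseline is zero.
    """
    if len(weekly_rates) < window + 1:
        return None
    baseline = mean(weekly_rates[-window - 1:-1])
    if baseline == 0:
        return None
    return (baseline - weekly_rates[-1]) / baseline


def needs_review(weekly_rates: list[float], window: int = 4, threshold: float = 0.3) -> bool:
    """True when the latest week fell at least `threshold` below the trailing mean."""
    drop = relative_drop(weekly_rates, window)
    return drop is not None and drop >= threshold


# Hypothetical weekly citation rates for one prompt cluster.
print(needs_review([0.20, 0.22, 0.21, 0.19, 0.12]))  # True (about a 41% drop)
print(needs_review([0.20, 0.22, 0.21, 0.19, 0.18]))  # False
```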

What do we do with LLM visibility data?

Most teams stop at the dashboard. The numbers feel interesting, but they do not automatically turn into action.

Real value appears when metrics connect to repeatable workflows. These steps convert visibility data into a practical operating rhythm for content, SEO, and growth teams.

Build a focused prompt tracking pack

Start with the questions your audience actually asks.

Create a list of 20–50 prompts that cover:

- High-intent product comparisons

- Category definitions

- Implementation questions

- Use-case decisions

Organize them by topic cluster and buyer intent.
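A prompt pack can live in a spreadsheet, but a small data structure shows what each entry needs. This sketch is hypothetical throughout. Each prompt carries its topic cluster and one of the four intent types listed above. It also checks the 20 to 50 prompt range suggested here; the four-prompt example is deliberately too small and fails that check.

```python
from collections import defaultdict
from dataclasses import dataclass

# The four prompt types listed above; the labels themselves are illustrative.
INTENTS = {"comparison", "definition", "implementation", "use-case"}


@dataclass(frozen=True)
class TrackedPrompt:
    text: str
    cluster: str  # topic cluster, e.g. a product area
    intent: str   # one of INTENTS

    def __post_init__(self):
        if self.intent not in INTENTS:
            raise ValueError(f"unknown intent: {self.intent!r}")


def group_pack(pack: list[TrackedPrompt]) -> dict[tuple[str, str], list[str]]:
    """Group prompt texts by (topic cluster, buyer intent)."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for prompt in pack:
        groups[(prompt.cluster, prompt.intent)].append(prompt.text)
    return dict(groups)


def pack_size_ok(pack: list[TrackedPrompt], low: int = 20, high: int = 50) -> bool:
    """True if the pack size falls inside the suggested range."""
    return low <= len(pack) <= high


pack = [
    TrackedPrompt("Acme vs Globex for invoice automation", "invoicing", "comparison"),
    TrackedPrompt("What is invoice automation?", "invoicing", "definition"),
    TrackedPrompt("How do I connect invoice automation to my ERP?", "invoicing", "implementation"),
    TrackedPrompt("Best invoicing tool for a small finance team", "invoicing", "use-case"),
]
print(len(group_pack(pack)), pack_size_ok(pack))  # 4 False
```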

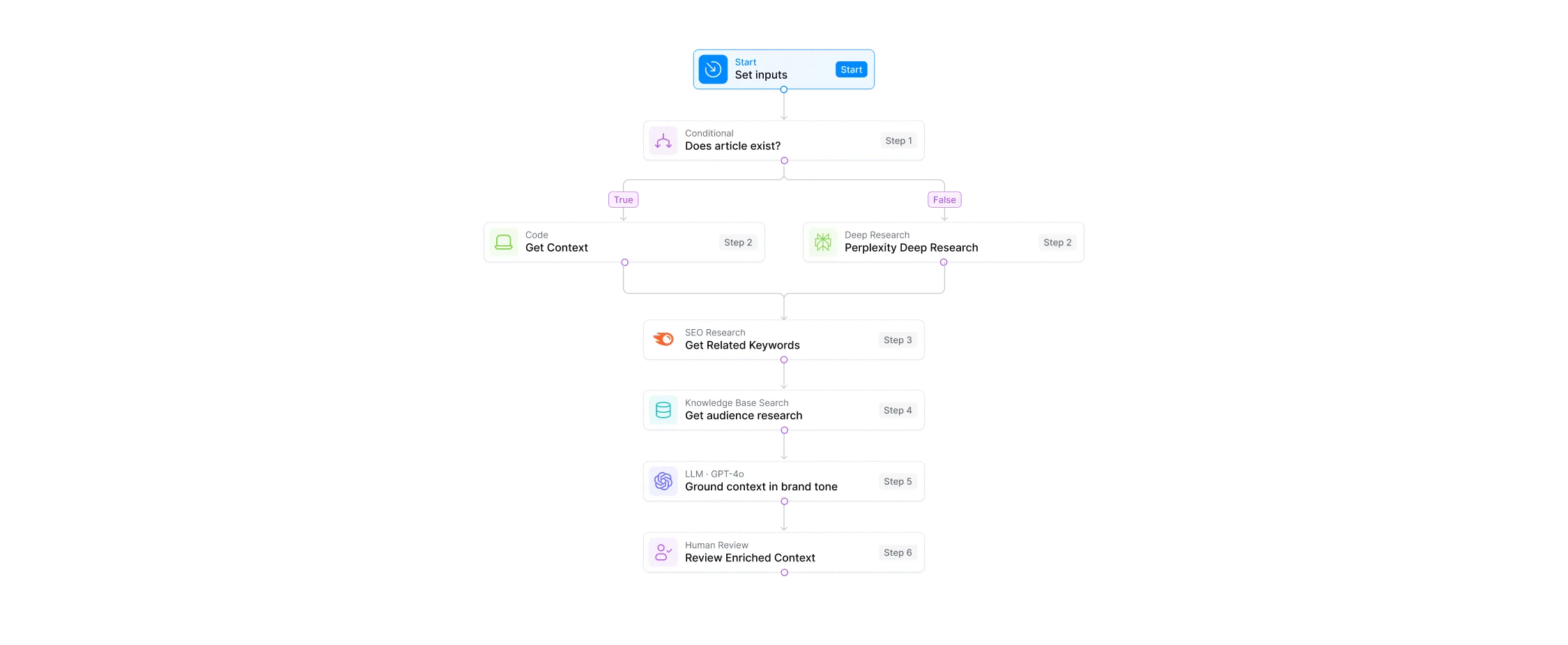

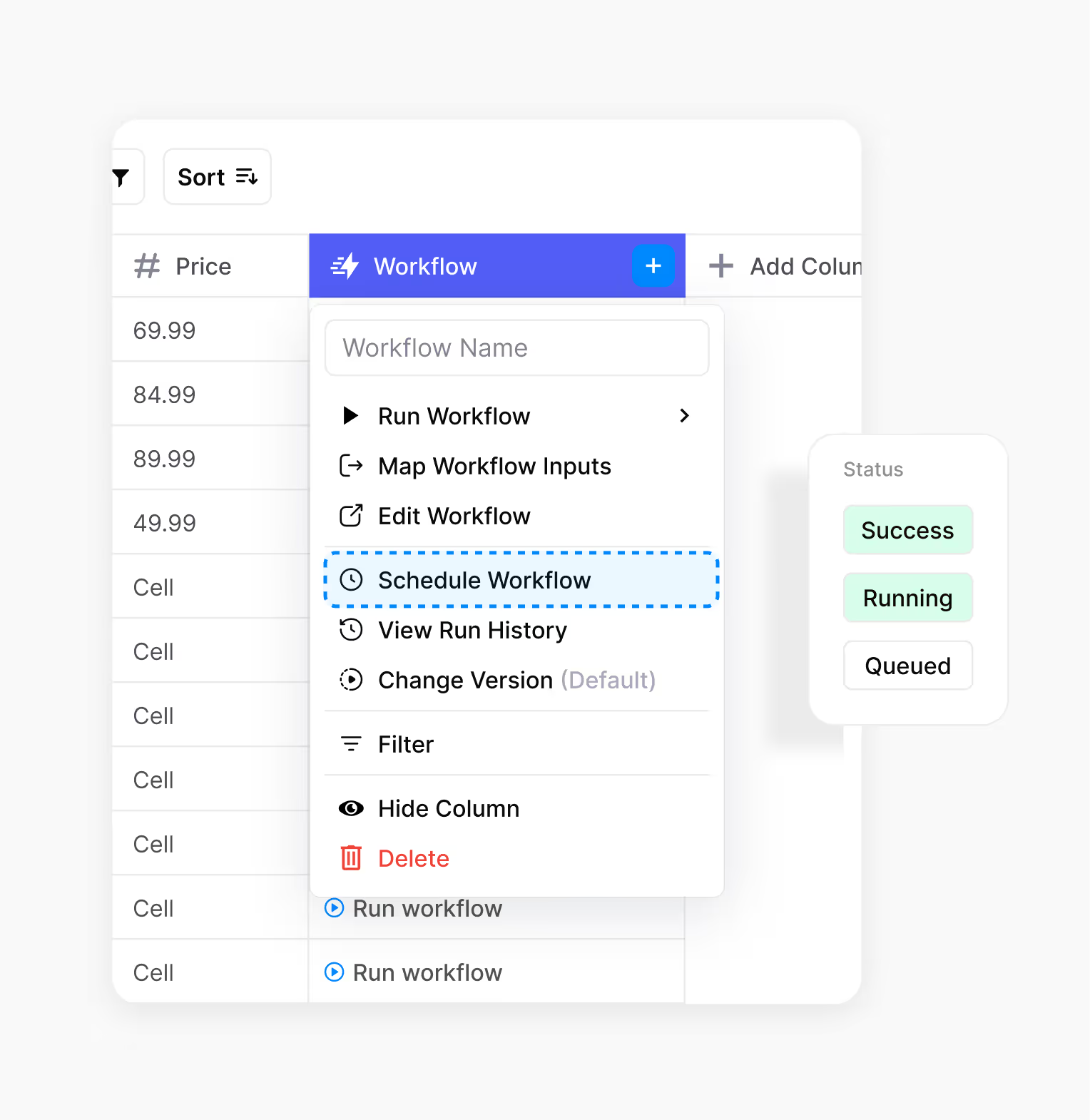

Many teams use AirOps to centralize prompt packs, automate testing across models, and track results over time. That approach turns visibility work into a structured process instead of a manual checklist.

Run simple, consistent audits

Complex processes slow teams down. A basic weekly routine works best:

- Run your saved prompts

- Record which brands appear

- Note citations and sentiment

- Log results in one place

Trends over time matter more than any single snapshot.
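"Log results in one place" can be as simple as appending rows to a CSV file after each weekly run. This sketch is hypothetical. The column names, the `log_audit_rows` helper and the sample row are illustrative. Set-valued fields are joined with semicolons, so each run stays on one row and the file remains easy to open in a spreadsheet.

```python
import csv
import io

FIELDS = ["week", "prompt", "model", "brands", "cited_urls", "sentiment"]


def log_audit_rows(out, rows: list[dict], write_header: bool = False) -> None:
    """Append one CSV row per prompt run; list and set fields are joined with ';'."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    if write_header:
        writer.writeheader()
    for row in rows:
        writer.writerow({
            **row,
            "brands": ";".join(sorted(row["brands"])),
            "cited_urls": ";".join(row["cited_urls"]),
        })


# In practice `out` would be open("ai_audit_log.csv", "a", newline="");
# an in-memory buffer keeps the example self-contained.
buffer = io.StringIO()
log_audit_rows(buffer, [{
    "week": "2026-01-05",
    "prompt": "What is invoice automation?",
    "model": "chatgpt",
    "brands": {"Globex", "Acme"},
    "cited_urls": ["https://acme.com/guide"],
    "sentiment": "neutral",
}], write_header=True)

buffer.seek(0)
logged = list(csv.DictReader(buffer))
print(logged[0]["brands"])  # Acme;Globex
```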

Turn gaps into content decisions

Visibility data highlights specific opportunities:

- A competitor appears for a topic where you have no content

- You rank well in search but never appear in AI answers

- AI tools cite outdated pages instead of your newer work

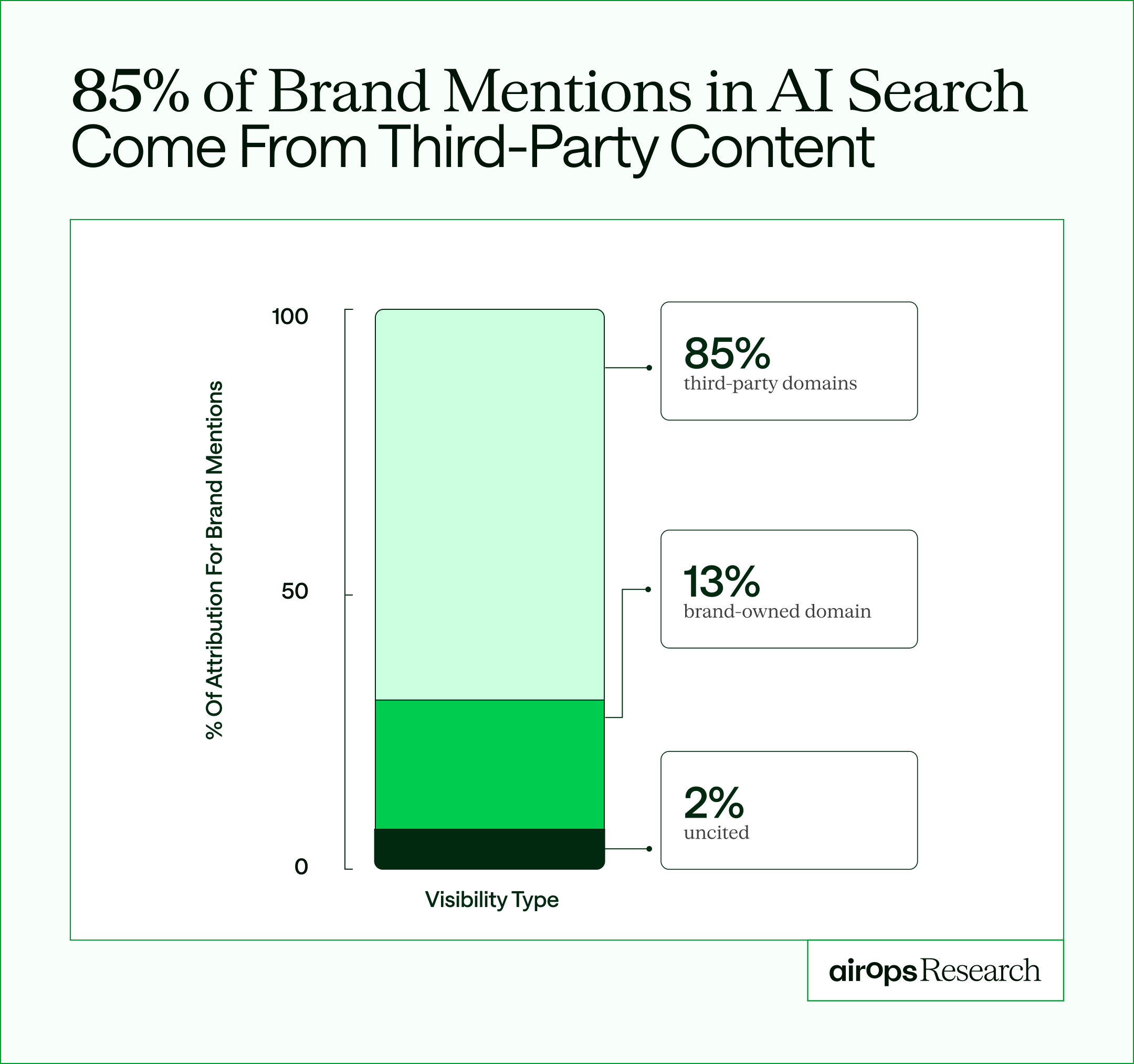

It's also important to look beyond your own website. AirOps research shows that approximately 85% of brand mentions in AI search originate from third-party pages rather than the brand’s own domain.

That means reviews, community discussions, partner content, and external validation play a major role in how AI systems describe you. Visibility gaps often point to off-site credibility issues as much as on-site content problems.

Many teams connect visibility data to content refresh workflows, so updates start from clear briefs instead of guesswork.

Prioritize by intent and impact

Not every gap deserves attention today. Focus first on the changes most likely to influence real buying decisions.

Work in this order:

- High-intent buyer queries

- Core category topics

- Long-tail educational questions

Fast feedback loops matter more than perfect analysis. Act on the clearest opportunities first, then refine the program over time.

In most organizations, this process works best as a shared effort between content and SEO, with RevOps or analytics providing measurement support. A monthly leadership review keeps priorities aligned and resources focused on the highest-impact updates.
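The work order in this section maps directly onto a sort key. This is a minimal sketch with two assumptions. Each gap is tagged with one of the three tiers listed above. Within a tier, gaps where competitors hold more share of voice come first; that tie-breaker is a hypothetical impact proxy, not a rule from the article. All prompts and numbers are illustrative.

```python
from dataclasses import dataclass

# Work order from the list above: lower number = handle first.
TIER_ORDER = {"high-intent": 0, "core-category": 1, "long-tail": 2}


@dataclass
class Gap:
    prompt: str
    tier: str                # one of TIER_ORDER's keys
    competitor_share: float  # hypothetical impact proxy: competitors' share of voice, 0 to 1


def prioritize(gaps: list[Gap]) -> list[Gap]:
    """Sort by intent tier first, then by how much ground competitors hold."""
    return sorted(gaps, key=lambda g: (TIER_ORDER[g.tier], -g.competitor_share))


queue = prioritize([
    Gap("How to handle duplicate invoices", "long-tail", 0.9),
    Gap("Acme vs Globex", "high-intent", 0.4),
    Gap("Best invoice automation tools", "high-intent", 0.7),
    Gap("Invoice automation overview", "core-category", 0.8),
])
print([g.prompt for g in queue])
```

The long-tail gap lands last even though competitors dominate it. That matches the advice to focus first on queries most likely to influence buying decisions.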

After teams begin tracking visibility and closing gaps, a practical question naturally follows: how do we judge impact when AI traffic still looks small?

How should teams evaluate AI traffic when it is currently small?

Many leaders hesitate because AI referral traffic still looks modest.

That's normal at this stage. The value of AI visibility shows up in three practical signals that matter long before click volume grows.

Branded search as a leading indicator

When AI tools mention your brand more often, people tend to search for you by name afterward. Increases in branded search volume often follow improvements in AI visibility.

Track both metrics on the same weekly timeline so you can see whether branded search rises after AI mentions increase.

Referral traffic where it exists

Some AI platforms already send measurable visits. Perplexity includes source links, and Google AI Overviews link out regularly. Use referrer reports, plus any UTM parameters that arrive on landing URLs, to capture what you can today.

Treat this traffic as incremental validation rather than the primary goal.
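To pull AI-referred visits out of an analytics export, you can classify each visit by its referrer host. If no referrer matches, the sketch falls back to a `utm_source` value on the landing URL. The hostname list below is an assumption, so confirm it against the referrers that actually appear in your own reports. It is also an assumption that any platform appends a matching `utm_source`. The sketch does not try to separate Google AI Overviews clicks, which usually arrive with an ordinary Google referrer.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Assumed hostnames for AI assistants; confirm against the referrers in your own analytics.
AI_SOURCES = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def _match_host(host: str) -> Optional[str]:
    """Map a hostname (or subdomain of one) to an AI source label."""
    host = host.lower()
    for domain, source in AI_SOURCES.items():
        if host == domain or host.endswith("." + domain):
            return source
    return None


def classify_visit(referrer: str, landing_url: str) -> Optional[str]:
    """Return the AI source for a visit, or None if it doesn't look AI-referred."""
    if referrer:
        source = _match_host(urlparse(referrer).hostname or "")
        if source:
            return source
    # Fall back to a utm_source value on the landing URL, if one is present.
    for value in parse_qs(urlparse(landing_url).query).get("utm_source", []):
        source = _match_host(value)
        if source:
            return source
    return None


print(classify_visit("https://www.perplexity.ai/search", "https://acme.com/guide"))  # Perplexity
print(classify_visit("", "https://acme.com/guide?utm_source=chatgpt.com"))          # ChatGPT
print(classify_visit("https://www.google.com/", "https://acme.com/"))               # None
```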

Pipeline influence

Early research behavior shapes later choices. Even when AI answers do not produce a click, they influence shortlists and evaluations.

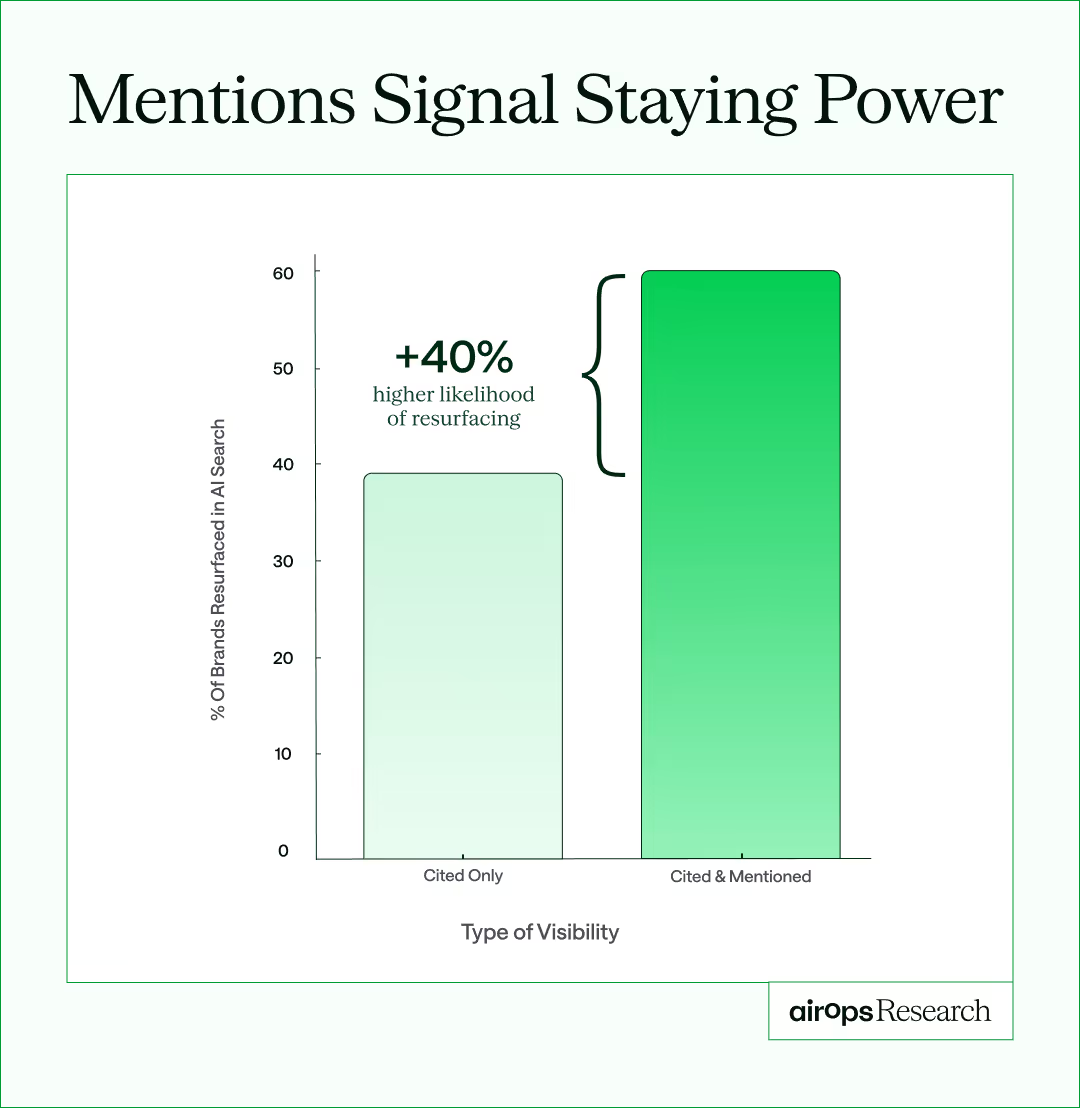

AirOps research found that brands earning both citations and mentions are 40% more likely to resurface across multiple runs than citation-only brands. Mentions stabilize visibility, even when they never turn into direct traffic.

Think of AI visibility the way you think about PR or brand search. The impact appears upstream of direct attribution, but it still affects real buying decisions.

How to choose the right AI visibility tool

The best tool depends on your team size, budget, and goals. Most options fall into three broad categories.

Enterprise platforms fit large marketing organizations that manage multiple brands or regions. These tools usually provide multi-model tracking, competitor benchmarking, and API access for deeper reporting.

SEO platform add-ons work well for teams already invested in tools like Semrush or Ahrefs. These options add AI visibility modules inside dashboards marketers already use, which keeps reporting simple and familiar.

AI-native trackers serve smaller teams or focused AEO programs. They often cost less and specialize in purpose-built monitoring without the overhead of full SEO suites.

Evaluation criteria that matter

Ask four practical questions before you choose a tool:

- Model coverage: Does the platform track ChatGPT, Perplexity, Google AI Overviews, and Claude?

- Prompt flexibility: Can you test your own prompts, or do you have to rely on preset templates?

- Historical data: Can you view trends over time instead of single snapshots?

- Integrations: Can the data connect to your content, analytics, or reporting stack?

Avoid tools that lock you into rigid prompt templates or narrow model support. Flexibility matters more than elaborate dashboards, especially as AI platforms continue to evolve.

Whatever tool you choose, focus on a simple path from measurement to action. The strongest setups connect visibility tracking to the systems teams already use for content planning and updates, so insights turn into work without extra handoffs.

Current limitations of AI visibility tools

AI visibility data helps teams make better decisions, but it is not a perfect measurement. Clear expectations prevent bad conclusions and keep teams focused on the right signals.

- Outputs change often: AI models respond differently to the same prompt over time, and small wording changes or model updates can shift results. Treat visibility trends as directional signals rather than precise scores.

- History is still shallow: Most tools store only a few months of data, which makes long-term comparisons difficult today. Build your own baseline now so future trends have meaningful context.

- Model access varies: Some platforms limit automated queries or adjust how data can be collected. Coverage differs between tools, and no single dashboard captures every AI system equally well.

- Attribution remains indirect: A citation in an AI answer rarely connects neatly to a conversion. Use proxy measures like branded search growth, referral traffic, and pipeline influence to judge impact instead.

These limits don't make the data useless. They simply change how teams should use it. Visibility metrics work best as a compass for content decisions, not as an exact revenue counter.

Put insights to work, starting this week

You can start improving AI visibility right away, even without new software.

Follow a simple five-step routine:

- List ten high-value questions your customers ask about your category

- Test those prompts manually in ChatGPT, Perplexity, and Google AI Overviews

- Record which brands appear most often

- Note citations and overall sentiment

- Save the results as a baseline to compare over time

This small process creates a clear picture of where you stand today and where content updates can deliver the biggest gains.

As your prompt list grows, manual tracking becomes hard to maintain. That is where a structured system helps. AirOps gives teams a practical way to manage prompt packs, monitor visibility across models, and turn insights into prioritized content actions without spreadsheets or repetitive testing. Instead of guessing what to fix next, you move directly from data to clear updates.

Book a demo to see how AirOps helps teams track AI visibility, prioritize gaps, and turn insights into repeatable content wins.

