Tracking LLM Brand Citations: A Complete Guide for 2026

- LLM visibility measures whether AI platforms mention your brand, describe it accurately, and link to your content, rather than where you rank in Google

- AI answers change by platform and by prompt, making structured, multi-platform tracking essential

- Competitive share of voice reveals which brands dominate AI recommendations for real buyer questions

- Consistent weekly testing uncovers trends and catches inaccuracies before they shape buyer perceptions

- Clear, answerable content, strong authority signals, and accessible site structure drive reliable AI citations

Your brand can rank on page one of Google and still disappear from the answers buyers get from ChatGPT, Perplexity, and Gemini.

More people now research products through AI tools before they ever open a browser tab. AI answer engines summarize options, compare vendors, and recommend solutions long before a visitor reaches your website. Yet most marketing teams have no reliable way to see how AI platforms represent their brand.

Visibility also shifts fast. AirOps research found only 30% of brands stayed visible from one answer to the next, and just 20% held presence across five consecutive runs. That volatility makes one-off checks misleading. A simple tracking cadence gives you a clearer signal and a baseline you can improve.

This guide explains how LLMs decide which brands to cite, how to measure AI visibility credibly, and how to compare your presence against competitors in a practical, repeatable way.

Why brand citation tracking in AI answer engines matters

AI answer engines shape buyer perceptions earlier than any landing page. When a prospect asks, “What’s the best project management tool for remote teams?” the response builds a shortlist instantly.

If your brand appears with accurate, positive context, you earn consideration. If a competitor appears instead, you lose ground before the sales cycle even begins.

LLM citation tracking measures how often AI systems:

- Mention your brand

- Describe your product accurately

- Recommend you alongside competitors

- Link back to your content as a source

These signals matter because AI discovery now influences purchase behavior. Recent studies show more than a third of consumers begin research with AI tools instead of traditional search engines.

How LLM citation tracking differs from traditional rank tracking

Traditional rank tracking answers a simple question: where do my pages appear for specific keywords?

LLM citation tracking answers a different question: does my brand appear at all in AI answers, and how well do those answers represent me?

Position versus presence

In SEO, position matters. Rank #1 beats rank #5.

With LLMs, presence matters first. If your brand is not mentioned, you have zero visibility, regardless of Google rankings.

Keywords versus prompts

Buyers do not type keywords into ChatGPT. They ask natural language questions like:

- “Which CRM works best for small sales teams?”

- “What tools help B2B marketers track intent signals?”

Tracking AI visibility requires prompts that mirror how real buyers ask questions, not how SEOs build keyword lists.

Static rankings versus variable responses

Google rankings change gradually. LLM answers can change dramatically based on:

- Timing

- Platform

- Small differences in phrasing

- New content entering the index

This variability makes structured, ongoing monitoring essential.

How LLMs decide which brands to cite and recommend

AI platforms choose sources using a mix of relevance, authority, and accessibility signals.

Content relevance and answerability signals

LLMs favor content that clearly answers questions in structured formats. Pages with clear answers, structured headings, logical organization, and concise explanations appear more often in AI answers.

This concept is called answerability. The easier it is for AI systems to extract an answer, the more likely your brand appears.

Authority and trust indicators

AI systems assess authority through multiple signals: backlinks from respected sources, expert authorship with verifiable credentials, brand mentions across the web, and consistent entity information.

A brand with strong external validation appears more trustworthy than one with a limited digital footprint.

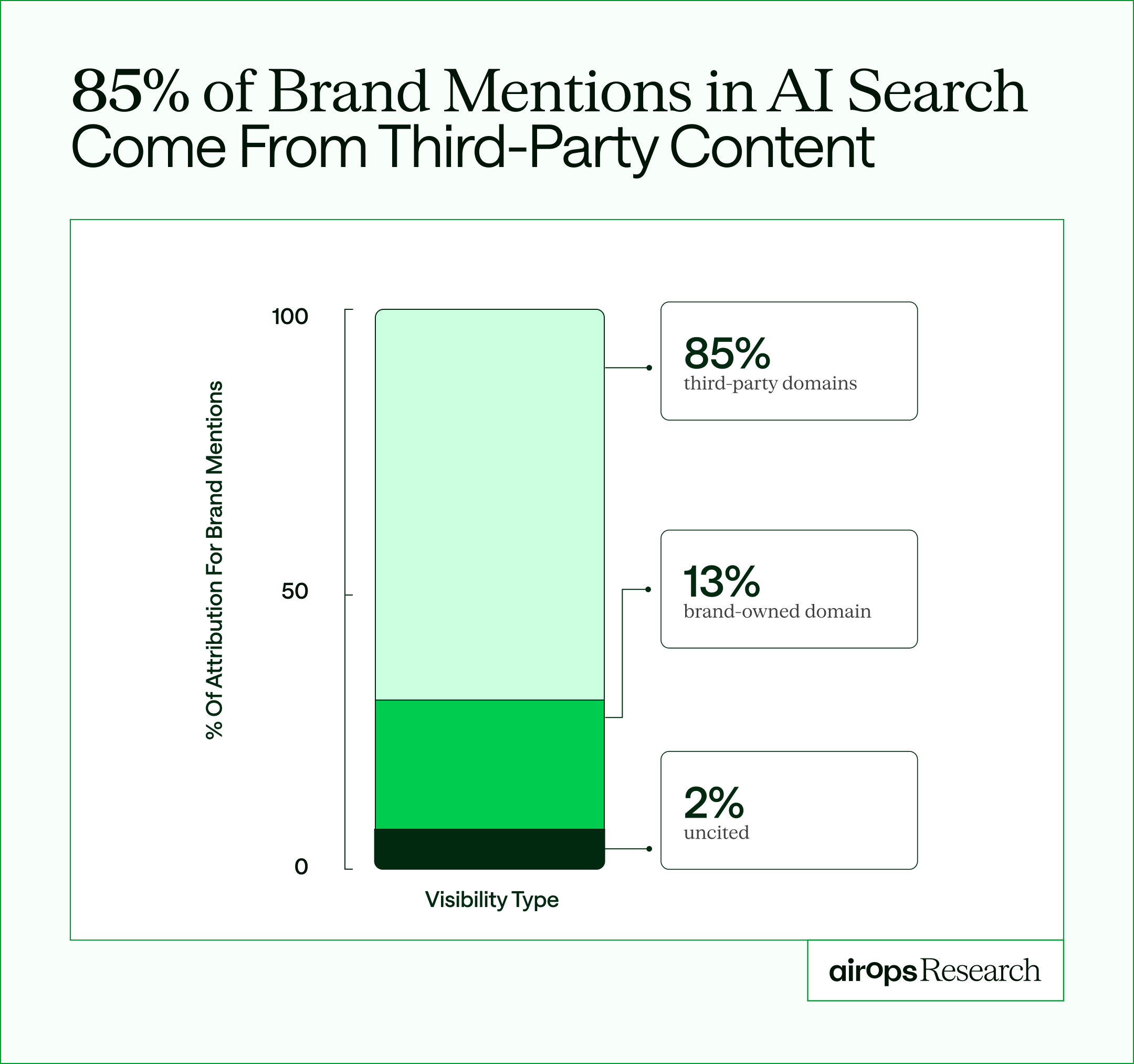

Off-site sources often drive that validation. AirOps research found 85% of brand mentions came from third-party pages, not owned domains. Track which external sources show up for competitors, since those pages often shape the vendor shortlists AI systems generate.

Technical accessibility and structured data

LLMs can only cite content they can parse. Visibility improves when you provide:

- Clean HTML structure

- Schema markup

- Fast-loading pages

- Crawlable, indexable content
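
Schema markup is commonly embedded as JSON-LD. As a rough sketch, the snippet below builds schema.org FAQPage markup using only Python's standard library. The helper name `faq_jsonld` and the question and answer text are placeholders invented for illustration, not part of any tool mentioned in this guide.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Placeholder content for illustration only.
markup = faq_jsonld([
    ("What does the product do?", "It tracks brand mentions in AI answers."),
])
script_tag = '<script type="application/ld+json">' + json.dumps(markup) + "</script>"
```

The resulting `script_tag` string would go in the page's HTML. Validate real markup with a structured data testing tool before shipping it.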

The core LLM brand visibility metrics to track

To measure AI visibility credibly, you need metrics designed for LLM behavior, not recycled SEO KPIs. Together, these metrics roll up into a brand visibility score that shows your influence across LLMs, Google AI Overviews, and AI Mode.

Here are the signals that actually matter.

1. Mention rate

How often does your brand get mentioned when buyers ask relevant questions?

Example: If you test 20 prompts and your brand appears in 12 responses, you have a 60% mention rate.

This is the foundational AI visibility metric.
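
The arithmetic behind that example can be sketched in a few lines. This assumes each response has already been marked as mentioning the brand or not. The function name `mention_rate` is made up for illustration.

```python
def mention_rate(mentioned_flags):
    """Fraction of responses in which the brand was mentioned."""
    if not mentioned_flags:
        return 0.0
    return sum(1 for flag in mentioned_flags if flag) / len(mentioned_flags)

# 20 tested prompts, 12 of which produced a mention (illustrative data).
flags = [True] * 12 + [False] * 8
rate = mention_rate(flags)
print(f"{rate:.0%}")  # prints 60%
```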

2. Sentiment

Not all mentions are equal.

Score responses as:

- Positive

- Neutral

- Negative

A high mention rate with negative sentiment signals a messaging problem.
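
One way to summarize sentiment is to tally the labels for responses that mentioned the brand. The sketch below assumes a reviewer has already assigned one of the three labels to each mention. The rule that flags a review when negative mentions outnumber positive ones is an arbitrary example, not a recommended threshold.

```python
from collections import Counter

LABELS = ("positive", "neutral", "negative")

def sentiment_breakdown(labels):
    """Return each label's share of the scored mentions (0.0 to 1.0)."""
    counts = Counter(labels)
    total = sum(counts[label] for label in LABELS)
    if total == 0:
        return {label: 0.0 for label in LABELS}
    return {label: counts[label] / total for label in LABELS}

# Illustrative labels for 12 responses that mentioned the brand.
scored = ["positive"] * 3 + ["neutral"] * 5 + ["negative"] * 4
breakdown = sentiment_breakdown(scored)

# Hypothetical rule: review messaging when negatives outnumber positives.
needs_review = breakdown["negative"] > breakdown["positive"]
```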

3. Citation rate

Some platforms include source links. Others only reference brands.

Measure:

- How often LLMs link to your content

- Which pages they cite

- How frequently competitors receive linked citations

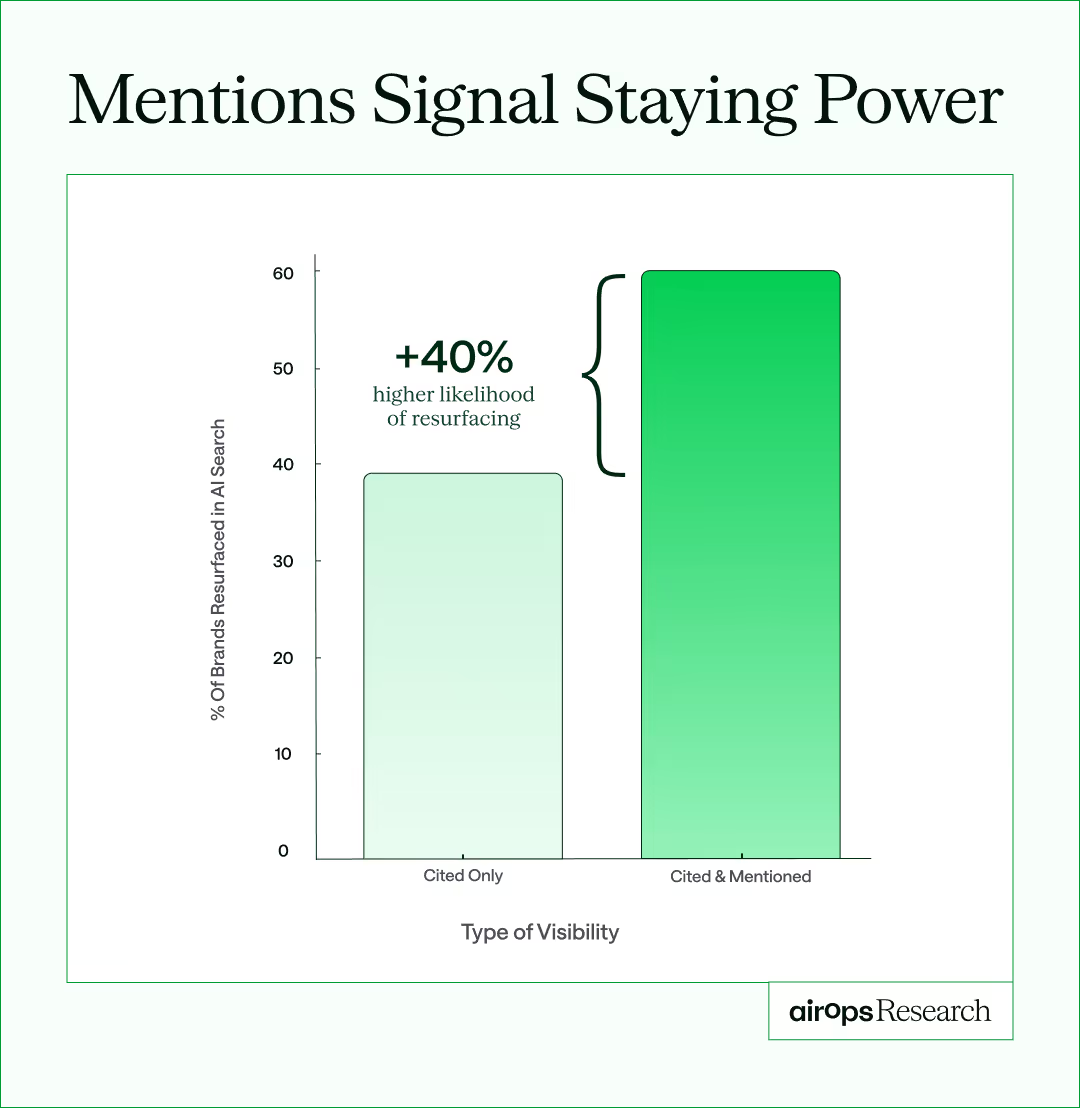

Mentions and citations together can also signal more stable visibility. AirOps research found that brands that earned both a mention and a citation were 40% more likely to reappear across consecutive answers. Track presence and citation rate together to see whether you’re building repeat visibility rather than just one-off mentions.
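
To track the two signals side by side, you could record a pair of flags per response and compute both rates from the same data. This is a minimal sketch. The function name, the dictionary keys, and the sample data are assumptions made for illustration.

```python
def citation_stats(results):
    """results: list of (mentioned, cited) booleans, one pair per response."""
    total = len(results)
    if total == 0:
        return {"citation_rate": 0.0, "mention_and_citation_rate": 0.0}
    cited = sum(1 for _, was_cited in results if was_cited)
    both = sum(1 for mentioned, was_cited in results if mentioned and was_cited)
    return {
        "citation_rate": cited / total,
        "mention_and_citation_rate": both / total,
    }

# Illustrative data for 20 responses.
results = (
    [(True, True)] * 5      # mentioned and linked
    + [(True, False)] * 7   # mentioned without a link
    + [(False, True)] * 1   # linked as a source but not named
    + [(False, False)] * 7  # absent
)
stats = citation_stats(results)
```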

4. Competitive share of voice

Brand visibility only matters in context.

If you appear in 40% of relevant responses and a competitor appears in 75%, you have a serious visibility gap. Share of voice puts your mention rate side by side with your competitors’ rates.

How to track your brand across ChatGPT, Perplexity, and Gemini

Tracking LLM visibility works best as a repeatable process. The goal stays simple: measure how often AI systems mention your brand, how accurately they describe you, and how you compare to competitors over time.

Here is a practical framework any team can follow.

Step 1: Build a prompt library that reflects real buyer questions

Start by creating a structured set of prompts that mirror how prospects actually search.

Aim for 20–30 prompts that cover:

- Category discovery queries

- Product comparison questions

- Problem-solution scenarios

- Implementation and use-case questions

Example prompts:

- “Best analytics platform for ecommerce brands”

- “Klaviyo vs HubSpot for mid-market companies”

- “How to improve customer onboarding at scale”

- “What software helps reduce churn for SaaS companies?”

Your prompt library becomes the foundation for consistent measurement. The more closely it matches real buyer intent, the more accurate your tracking will be.
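
A prompt library can start as a small typed list. The sketch below tags each example prompt with one of the four coverage areas and checks for gaps. The category keys and the category assigned to each prompt are one reading of the examples, not an official taxonomy.

```python
from dataclasses import dataclass

# Hypothetical keys for the four coverage areas listed above.
CATEGORIES = ("discovery", "comparison", "problem_solution", "implementation")

@dataclass(frozen=True)
class Prompt:
    text: str
    category: str

LIBRARY = [
    Prompt("Best analytics platform for ecommerce brands", "discovery"),
    Prompt("Klaviyo vs HubSpot for mid-market companies", "comparison"),
    Prompt("What software helps reduce churn for SaaS companies?", "problem_solution"),
    Prompt("How to improve customer onboarding at scale", "implementation"),
]

def missing_categories(library):
    """List the coverage areas that have no prompt yet."""
    covered = {prompt.category for prompt in library}
    return [category for category in CATEGORIES if category not in covered]
```

Calling `missing_categories(LIBRARY)` on a partial library shows which areas still need prompts before you start measuring.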

Step 2: Test the same prompts across multiple AI platforms

Run each prompt on all major AI answer engines:

- ChatGPT

- Perplexity

- Google Gemini

- Claude

Every platform draws on different sources and applies its own ranking logic. A brand that appears prominently in Perplexity might be missing entirely from ChatGPT. Tracking across platforms gives you a complete picture instead of a single-channel snapshot.
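
The loop itself is simple: every prompt goes to every platform. The sketch below keeps the platform call abstract, because each vendor's API differs and some teams collect answers by hand. `fake_ask` is a stand-in that returns canned text, not a real client.

```python
from typing import Callable, Dict, List, Tuple

PLATFORMS = ["ChatGPT", "Perplexity", "Google Gemini", "Claude"]

def run_prompts(
    prompts: List[str], ask: Callable[[str, str], str]
) -> Dict[Tuple[str, str], str]:
    """Collect one answer per (platform, prompt) pair."""
    return {
        (platform, prompt): ask(platform, prompt)
        for platform in PLATFORMS
        for prompt in prompts
    }

def fake_ask(platform: str, prompt: str) -> str:
    # Placeholder: replace with a real API call or a lookup of pasted answers.
    return f"[{platform}] answer to: {prompt}"

answers = run_prompts(["Which CRM works best for small sales teams?"], fake_ask)
```

Keeping `ask` as a parameter means the same loop works whether answers come from an API, a browser session, or a spreadsheet of pasted text.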

Step 3: Score every response with the same criteria

For each prompt and platform, evaluate the output using a simple, repeatable scoring model:

- Mentioned or not mentioned: Did your brand appear at all?

- Accurate or inaccurate: Was the description correct?

- Sentiment: Positive, neutral, or negative

- Citation type: Linked source or mention only

This scoring turns subjective impressions into measurable data.
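
A scoring record might look like the sketch below. Mention and citation checks are easy to automate with string matching, while accuracy and sentiment usually need a human reviewer or a separate classifier, so they are plain fields here. The brand "Acme" and the domain `acme.example` are invented for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Score:
    mentioned: bool
    accurate: Optional[bool]   # None when the brand was not mentioned
    sentiment: Optional[str]   # "positive", "neutral", "negative", or None
    citation: str              # "linked", "mention_only", or "none"

def detect_mention(answer: str, brand: str) -> bool:
    """Whole-word, case-insensitive match on the brand name."""
    pattern = rf"\b{re.escape(brand)}\b"
    return re.search(pattern, answer, flags=re.IGNORECASE) is not None

def detect_citation(answer: str, brand: str, domain: str) -> str:
    """Classify the answer as linked, mention-only, or neither."""
    if domain.lower() in answer.lower():
        return "linked"
    return "mention_only" if detect_mention(answer, brand) else "none"

answer = "Acme (https://acme.example/pricing) suits small teams."
score = Score(
    mentioned=detect_mention(answer, "Acme"),
    accurate=True,         # set by a human reviewer
    sentiment="positive",  # set by a human reviewer
    citation=detect_citation(answer, "Acme", "acme.example"),
)
```

The whole-word match avoids counting lookalike names as mentions, although brands with common-word names will still need manual review.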

Step 4: Measure competitive context

Once you score responses, add a competitive layer:

- How often does your brand appear compared to competitors?

- Which platforms favor certain brands more than others?

- Where do answers conflict or disagree?

This analysis creates a true AI share-of-voice view instead of isolated brand checks.
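
To see which platforms favor which brands, you could group scored responses by platform and compute a mention rate per brand. The sketch below assumes each observation records the platform and the set of brands named in that answer. "Acme" and "Globex" are made-up brands.

```python
from collections import defaultdict

def rates_by_platform(observations, brands):
    """observations: list of (platform, set_of_brands_mentioned)."""
    totals = defaultdict(int)
    hits = defaultdict(lambda: defaultdict(int))
    for platform, mentioned in observations:
        totals[platform] += 1
        for brand in brands:
            if brand in mentioned:
                hits[platform][brand] += 1
    return {
        platform: {brand: hits[platform][brand] / totals[platform] for brand in brands}
        for platform in totals
    }

# Illustrative observations from two platforms.
observations = [
    ("ChatGPT", {"Acme", "Globex"}),
    ("ChatGPT", {"Globex"}),
    ("Perplexity", {"Acme"}),
    ("Perplexity", {"Acme", "Globex"}),
]
rates = rates_by_platform(observations, ["Acme", "Globex"])
```

In this sample, Globex leads on ChatGPT while Acme leads on Perplexity, which is the kind of platform-level disagreement the competitive layer is meant to surface.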

Step 5: Track results on a regular cadence

LLM responses change frequently. New content enters indexes, algorithms update, and phrasing shifts outcomes.

A weekly tracking schedule lets you:

- Spot visibility changes early

- Catch inaccurate answers before they spread

- See which platforms trend up or down

- Measure whether content updates improve citations

Consistency matters more than any single data point. Many teams manage this process with a simple spreadsheet at first, then move to a dedicated tracking tool once the workflow becomes hard to maintain manually.
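
With weekly data in hand, the trend is just the change from one week to the next. The sketch below assumes one mention rate per ISO week, stored in chronological order. The week labels and rates are invented.

```python
def week_over_week(rates):
    """rates: list of (iso_week, mention_rate) in chronological order.

    Returns (iso_week, change_from_previous_week) for every week after the first.
    """
    return [
        (week, round(rate - previous_rate, 4))
        for (_, previous_rate), (week, rate) in zip(rates, rates[1:])
    ]

# Illustrative weekly mention rates.
history = [("2026-W01", 0.40), ("2026-W02", 0.45), ("2026-W03", 0.35)]
changes = week_over_week(history)
```

A positive change means the brand appeared in more answers than the week before. A run of negative values is the signal to investigate.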

With this process in place, you move from guesswork to clear visibility into how AI platforms represent your brand.

Tools for monitoring LLM brand citations

Several approaches exist for tracking AI visibility, from dedicated platforms to lightweight internal processes.

Dedicated AI visibility platforms

Tools built specifically for AI search monitoring automate prompt testing and organize results consistently. AirOps Insights tracks how brands appear across ChatGPT, Perplexity, Gemini, and Claude, so teams can measure presence over time and spot when answers change.

This approach reduces the manual work of copying outputs into spreadsheets and makes it easier to track accuracy, sentiment, and citations on a regular cadence.

SEO tools with AI tracking features

Some traditional SEO tools now include basic LLM monitoring. These can help, but they often lack:

- Prompt-level analysis

- Accuracy scoring

- Platform comparisons

Manual tracking approaches

Teams can begin with a simple spreadsheet to record prompts, scores, and weekly changes. This approach works for pilots, but becomes hard to maintain at scale.
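
If the spreadsheet is a CSV file, a small script can keep the columns consistent from week to week. This sketch uses Python's built-in `csv` module. The column names are a suggestion based on the scoring criteria in this guide, and the sample row is invented.

```python
import csv
import io

# Hypothetical column layout for the tracking sheet.
FIELDS = ["week", "platform", "prompt", "mentioned", "accurate", "sentiment", "citation"]

def write_rows(rows, stream):
    """Write scored responses as CSV with a fixed header."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)

# In-memory buffer for the example; swap in
# open("tracking.csv", "w", newline="") to write a real file.
buffer = io.StringIO()
write_rows(
    [
        {
            "week": "2026-W01",
            "platform": "Perplexity",
            "prompt": "Best analytics platform for ecommerce brands",
            "mentioned": True,
            "accurate": True,
            "sentiment": "neutral",
            "citation": "linked",
        }
    ],
    buffer,
)
```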

How to measure competitive share of voice in AI search

The tracking process measures your brand performance. Share of voice analysis compares that performance directly to competitors.

Share of voice in AI search answers a simple question: when buyers ask AI systems for help, which brands show up most often?

Unlike traditional SEO competitor tracking, this analysis focuses on brand mentions inside AI answers rather than keyword rankings.

Identify which competitors AI systems recommend

Start with category-level prompts that naturally surface vendor lists, such as:

- “What are the best tools for [use case]?”

- “Which platforms help with [problem]?”

- “Top software for [industry] teams”

These questions reveal which brands LLMs treat as authoritative options in your space.

Calculate AI share of voice

Use the same prompt library you built for brand tracking and tally results across platforms.

For each brand, measure:

- Total number of appearances

- Percentage of prompts where they appear

- Platforms where they appear most often

This metric quickly shows who dominates AI recommendations and where gaps exist.

To calculate share of voice, use the following formula: Share of Voice (%) = (Your Brand’s Mentions or Visibility ÷ Total Mentions or Visibility of All Brands) × 100

Here are several methods for calculating share of voice across multiple channels:

Paid Media: Use ad impressions or spend to calculate your ad visibility relative to competitors.

Example: Your ads received 1M impressions out of 10M total impressions across all brands, yours included → SOV = 10%.

Organic Search: Measure your share of total keyword rankings or estimated traffic.

Example: Your domain ranks for 500 of the 5,000 tracked keywords → SOV = 10%.

Social Media: Track mentions, hashtags, or engagement volume compared to competitors.

Example: Your brand had 2,000 mentions out of 20,000 total → SOV = 10%.

PR Coverage: Count the number of news mentions, editorial placements, or backlinks across media outlets.

AI Answer Engines (ChatGPT, Perplexity, Gemini): Measure how often AI systems cite or mention your brand compared to competitors in AI-generated answers.

Example: Your brand appears in 120 of 1,000 total AI answers within your category → AI SOV = 12%.
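
The formula translates directly into code. In the sketch below, the denominator is the total number of brand mentions counted across all tracked brands, which is one reasonable reading of "total mentions." The brand names and counts are invented so that the result matches the 12% example.

```python
def share_of_voice(mentions):
    """mentions: dict of brand -> mention count. Returns brand -> percentage."""
    total = sum(mentions.values())
    if total == 0:
        return {brand: 0.0 for brand in mentions}
    return {brand: 100 * count / total for brand, count in mentions.items()}

# Illustrative counts: 1,000 brand mentions in total across the category.
counts = {"YourBrand": 120, "Competitor A": 530, "Competitor B": 350}
sov = share_of_voice(counts)
print(sov["YourBrand"])  # prints 12.0
```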

Track competitive movement over time

Share of voice only becomes meaningful when measured consistently.

Regular monitoring helps you spot:

- Competitors gaining visibility after publishing new content

- Sudden drops in your own mentions

- Platforms where certain brands outperform others

- Emerging rivals entering AI answers for the first time

When a competitor begins appearing more frequently, it often signals one of three things:

- They published new high-answerability content

- They earned new authority signals

- Their messaging better matches buyer intent

Tracking these shifts early lets you respond before perceptions harden.
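
Spotting movement comes down to comparing two snapshots. The sketch below flags any brand whose share of voice moved by at least a chosen number of percentage points, including brands that appear for the first time. The 5-point threshold and the brand names are arbitrary examples.

```python
def biggest_movers(previous, current, threshold=5.0):
    """Compare two share-of-voice snapshots (brand -> percent).

    Returns brands whose share changed by at least `threshold` points,
    sorted from largest gain to largest loss.
    """
    movers = {}
    for brand in set(previous) | set(current):
        delta = current.get(brand, 0.0) - previous.get(brand, 0.0)
        if abs(delta) >= threshold:
            movers[brand] = delta
    return dict(sorted(movers.items(), key=lambda item: item[1], reverse=True))

# Illustrative snapshots; "Umbrella" is a new entrant this period.
last_month = {"YourBrand": 20.0, "Globex": 50.0, "Initech": 30.0}
this_month = {"YourBrand": 12.0, "Globex": 52.0, "Initech": 28.0, "Umbrella": 8.0}
movers = biggest_movers(last_month, this_month)
```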

Building your AI search analytics dashboard

A useful dashboard focuses on four signals:

- Mention rate over time

- Sentiment

- Citation rate

- Competitive share of voice

Visualizations to include:

- Weekly trend lines

- Platform comparisons

- Top prompts by performance

- Competitors gaining or losing visibility

Set alerts for:

- Sudden drops in presence

- Spikes in negative sentiment

- Major competitor movements
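
Alert rules can start as simple threshold checks between two reporting periods. The sketch below covers the first two alerts. The 10-point thresholds and the dictionary keys are assumptions to tune against your own data, and competitor alerts would need per-brand snapshots as well.

```python
def check_alerts(previous, current, drop=0.10, negative_spike=0.10):
    """previous/current: dicts with 'mention_rate' and 'negative_share' (0 to 1)."""
    alerts = []
    if previous["mention_rate"] - current["mention_rate"] >= drop:
        alerts.append("presence_drop")
    if current["negative_share"] - previous["negative_share"] >= negative_spike:
        alerts.append("negative_sentiment_spike")
    return alerts

# Illustrative weekly summaries.
last_week = {"mention_rate": 0.60, "negative_share": 0.05}
this_week = {"mention_rate": 0.45, "negative_share": 0.20}
alerts = check_alerts(last_week, this_week)
```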

Common LLM tracking mistakes to avoid

AI visibility tracking breaks down when teams make a few common mistakes:

- Tracking mentions without verifying accuracy

- Using generic prompts that miss real buyer intent

- Ignoring differences between AI platforms

- Treating citation tracking as a one-time project

Counting mentions alone can mislead you when details are wrong or outdated. Broad prompts like “best CRM” rarely match how buyers actually search. Each AI platform pulls from different sources, so single-channel tracking gives an incomplete view. And one-time audits miss the ongoing shifts that regular monitoring reveals.

How to improve your brand's LLM citation rate

Tracking reveals where visibility falls short, but progress requires a clear path from insight to action. Effective improvement turns what you learn from monitoring into specific content updates. Refresh outdated pages, add structured answers to high-intent questions, and publish clearer comparison resources that speak directly to buyer needs. A repeatable refresh workflow keeps this work consistent and measurable instead of turning it into scattered rewrites.

Keep key pages fresh

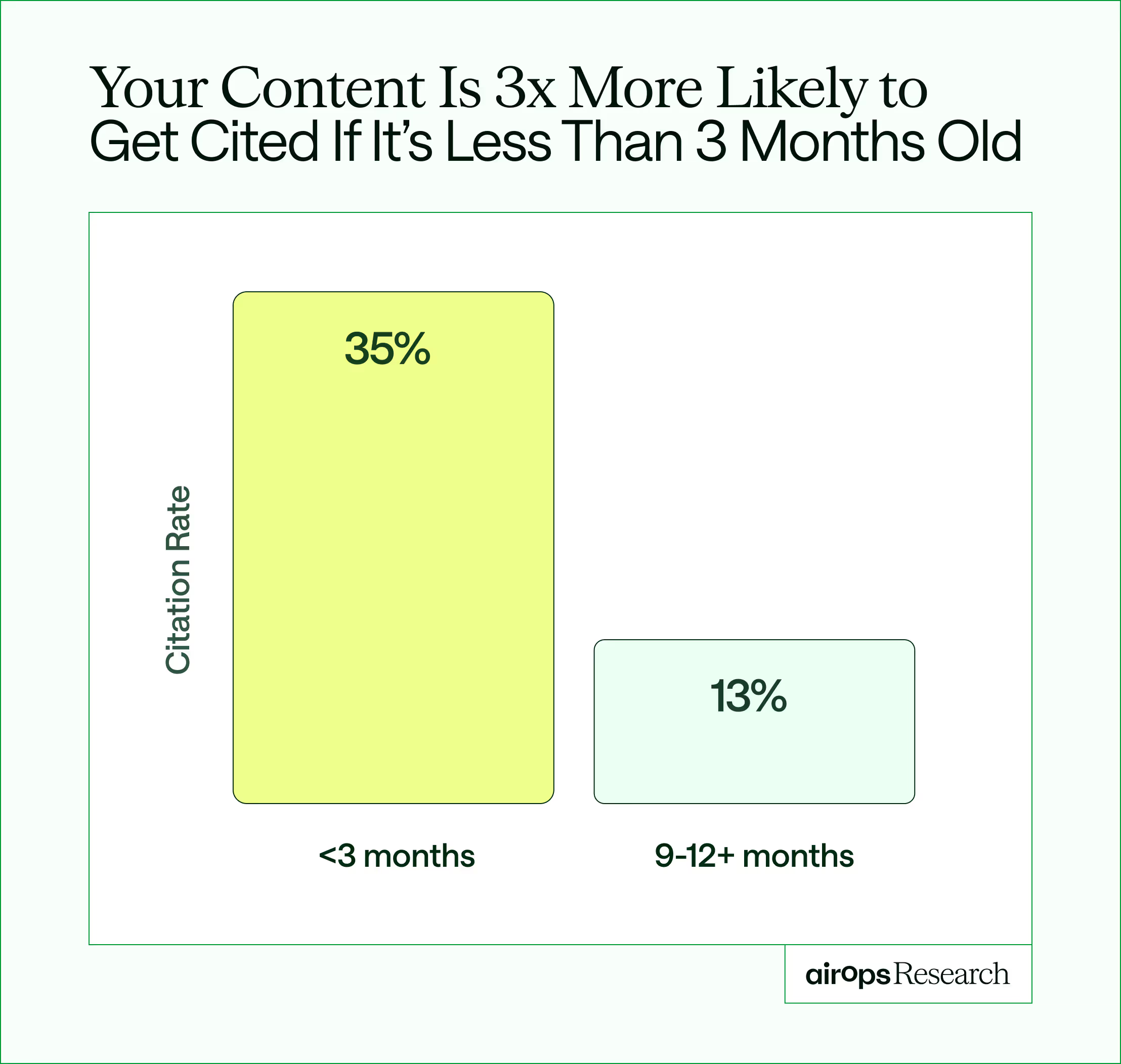

Freshness acts like a citation lever in AI search. AirOps research found that pages not updated quarterly were 3× more likely to lose citations.

Start with high-intent pages buyers and AI systems rely on most—comparison pages, pricing and packaging pages, core category guides, and solution pages.
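
A refresh workflow needs a list of pages that are overdue. The sketch below treats "quarterly" as 90 days, which is an approximation, and assumes you can export a last-updated date per page from your CMS. The URL paths and dates are invented.

```python
from datetime import date, timedelta

def stale_pages(last_updated, today, max_age_days=90):
    """last_updated: dict of URL path -> date of last update.

    Returns the paths not updated within `max_age_days`, sorted alphabetically.
    """
    cutoff = today - timedelta(days=max_age_days)
    return sorted(path for path, updated in last_updated.items() if updated < cutoff)

# Illustrative export of last-updated dates.
pages = {
    "/pricing": date(2026, 2, 1),
    "/compare/acme-vs-globex": date(2025, 10, 15),
    "/guides/category": date(2025, 11, 30),
}
overdue = stale_pages(pages, today=date(2026, 3, 1))
```

Passing `today` explicitly keeps the check reproducible. In a scheduled job you would pass `date.today()`.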

Improve answerability

- Add clear question-and-answer sections

- Use structured headings

- Publish comparison content

- Address common buyer concerns directly

Content that speaks clearly to a defined audience shows up more often in AI answers. As Steve Toth, CEO of Notebook Agency, explains:

“When your content states who it’s for, mirrors the ICP’s terminology, and addresses common problems, the user context prompts the LLM to retrieve hyper-relevant content. That increases the likelihood your content will be recommended for high-intent queries.” — Steve Toth

Writing with explicit audiences in mind helps AI systems match your pages to the right buyer scenarios. Specificity beats generic coverage when models decide which brands to cite.

Strengthen authority signals

- Earn mentions on industry sites

- Publish expert-led content

- Maintain consistent brand information

Improve technical accessibility

- Add schema markup

- Simplify page structure

- Remove crawl barriers

- Improve page speed

Turn AI visibility into a measurable advantage

AI answer engines already shape how buyers compare options and build shortlists. Visibility in this environment no longer depends on keyword rankings alone. It depends on how often AI platforms mention your brand, how accurately they describe you, and how you compare to competitors in real conversations.

Teams that track LLM citations consistently can:

- Catch inaccuracies before they spread

- Close competitive visibility gaps

- Understand where each platform pulls information

- Measure real progress with credible metrics

Without structured tracking, brands stay blind to how AI systems represent them. With the right process, visibility becomes something you can measure, improve, and prove.

AirOps brings all of this into one system. You can monitor brand mentions across ChatGPT, Perplexity, Gemini, and Claude, track competitive share of voice, and see exactly which content updates will earn more accurate citations and stronger recommendations.

Book a demo to see how AirOps helps teams measure AI visibility, track competitors, and improve LLM citations at scale.

How often should I update my LLM citation tracking prompts to stay relevant?

Review and refresh your prompt library quarterly to match evolving buyer language and emerging use cases. As market terminology shifts and new competitor products launch, outdated prompts will miss how prospects actually phrase questions to AI systems.

Can negative brand mentions in AI answers hurt my sales pipeline?

Yes, inaccurate or negative AI responses can disqualify your brand before prospects ever visit your website. Buyers increasingly trust AI summaries as objective, so a single misleading description can remove you from consideration during the critical early research phase.

Do AI answer engines favor newer content over older authoritative pages?

AI systems balance recency with authority signals, but stale content loses ground over time. Pages with recent updates, current statistics, and fresh examples tend to surface more reliably than older pages with outdated information, even if the older content has stronger backlink profiles.

How do I know which AI platform matters most for my industry?

Run identical prompts across ChatGPT, Perplexity, Gemini, and Claude, then track where your target buyers actually spend time researching. B2B tech buyers may favor Perplexity for sourced answers, while general consumers often default to ChatGPT—platform priority should follow your audience behavior.

What should I do when an AI gives completely wrong information about my product?

Document the inaccuracy with screenshots, then prioritize updating your owned content with clear, structured corrections that directly address the misinformation. AI systems eventually re-index improved content, and consistent accurate information across your site and third-party sources helps correct the record over time.
