LLM Optimization: Complete Guide to Techniques and Tools

- AI answer engines prioritize sources they can extract and quote cleanly, not pages that simply rank well

- Clear, declarative sentences and early definitions make content easier for LLMs to reuse in answers

- Ambiguous phrasing and heavy pronoun use reduce citation reliability and answer inclusion

- Sentence-level clarity and structure influence AI citations more than keyword repetition

- Citation frequency and brand mentions provide better visibility signals than traffic alone

Your content can rank first on Google and still stay invisible to people who ask ChatGPT or Perplexity instead of clicking search results. LLM optimization focuses on making your content extractable and citable by AI answer engines, so your brand shows up when those systems generate responses.

This guide explains how LLM optimization works, which writing techniques increase citation likelihood, and how to balance clarity, specificity, and SEO without sacrificing readability.

What is LLM optimization?

LLM optimization is the practice of shaping content so AI answer engines like ChatGPT, Perplexity, and Google Gemini can easily extract, understand, and cite it.

In engineering contexts, the term often refers to model tuning or retrieval systems. For marketers, LLM optimization means something simpler and more practical: when someone asks an AI a question related to your space, your content becomes the source it pulls from.

Traditional SEO aims to rank pages in a list of links. LLM optimization aims to become the quoted source inside the answer itself.

Why LLM optimization matters for brand visibility

Search behavior has shifted from scanning results to asking full questions and trusting a single synthesized response. As that shift accelerates, brands that do not appear in AI answers lose visibility—even if their pages continue to rank well in traditional search.

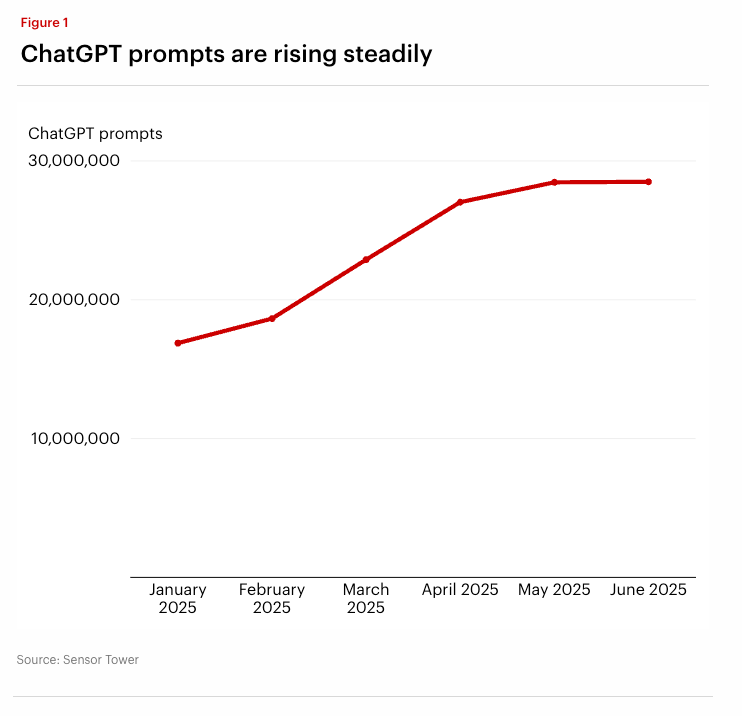

Bain & Company reported that ChatGPT prompt volume grew by nearly 70% in the first half of 2025. At the same time, SparkToro found that nearly 60% of Google searches in the EU end without a click. AI answers accelerate this shift by resolving questions directly on the results page, changing how visibility is earned and measured.

AI answers act as gatekeepers for brand authority

AI systems decide which sources to reference and which to ignore, shaping brand perception before a user ever visits a website. When competitors appear consistently in AI answers and you do not, they become the default authority in your category.

That impact compounds over time. Missing citations do more than reduce traffic. They weaken brand credibility, limit top-of-funnel influence, and redirect potential customers toward brands that AI systems surface more often.

As AI answers become the primary discovery layer, brand visibility increasingly depends on being referenced consistently across AI-generated responses—not simply appearing in traditional search results.

LLM optimization vs traditional SEO

LLM optimization does not replace SEO. It addresses a different discovery channel with different success criteria.

While the mechanics differ, AI search optimization and SEO are increasingly interconnected. Many of the same content decisions—clear structure, strong topical coverage, and credible sourcing—influence performance across both discovery paths.

SEO still matters for transactional and navigational queries. LLM optimization matters most for informational and evaluative questions where AI systems synthesize answers on the user’s behalf.

How phrasing affects whether LLMs cite your content

LLMs don't “read” content the way humans do—they extract, compare, and reuse it at the sentence level. Phrasing that is clear, direct, and unambiguous significantly increases the likelihood that your content gets cited in AI answers.

Use declarative sentences

LLMs favor sentences that state facts directly.

Good example: “LLM optimization focuses on making content extractable and citable by AI systems.”

Weak example: “LLM optimization is kind of about helping AI understand your content.”

Declarative phrasing reduces interpretation risk and increases citation reliability. In an AirOps analysis of AI-cited pages, content that used exact or close language matches, such as “what is,” “how to,” and other direct phrasing patterns, was far more likely to be selected as a source. AI systems consistently favor pages that answer questions in the same language users use to ask them.

Reduce ambiguity at the sentence level

AI systems extract content at the sentence and paragraph level. Each sentence should remain clear if lifted out of context.

Instead of: “This helps them understand it better.”

Write: “This structure helps AI systems extract definitions accurately.”

Using full nouns instead of pronouns improves extractability. Defining key terms near the top of a page or section further reduces misinterpretation, since AI systems often pull definitions from the first clear explanation they encounter.
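The pronoun guidance above can be checked mechanically during editing. The sketch below is a crude heuristic, not a grammar parser: it assumes a short, illustrative pronoun list (`CONTEXT_PRONOUNS`) and a naive sentence splitter, and it flags any sentence that contains a third-person pronoun so an editor can decide whether a full noun would read better. The function names are hypothetical.

```python
import re

# Third-person pronouns that usually need an earlier sentence to resolve.
# This list is an illustrative assumption, not a complete inventory.
CONTEXT_PRONOUNS = {"it", "they", "them", "he", "she", "him", "her"}


def split_sentences(text):
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def flag_context_dependent(text):
    """Return sentences that contain at least one pronoun from the list."""
    flagged = []
    for sentence in split_sentences(text):
        words = re.findall(r"[a-z']+", sentence.lower())
        if any(word in CONTEXT_PRONOUNS for word in words):
            flagged.append(sentence)
    return flagged


sample = (
    "This helps them understand it better. "
    "This structure helps AI systems extract definitions accurately."
)
for sentence in flag_context_dependent(sample):
    print("Review:", sentence)
```

A flagged sentence is only a prompt for review. Some pronouns are unambiguous inside a single sentence, so the final call stays with the writer.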

Avoid rhetorical questions in core explanations

Rhetorical questions can add tone for human readers, but they introduce ambiguity for AI extraction. Use them sparingly and avoid them in sections intended to be cited.

When explaining a concept, state it directly rather than framing it as a question.

How to write content that is AEO optimized

Answer engine optimization (AEO) depends less on clever writing and more on precision and structure.

Balance readability and specificity

Short sentences improve readability, but oversimplification reduces authority. Aim for clear sentences that still include concrete details, examples, or constraints.

A good test: could this sentence be quoted without losing meaning?

Control sentence complexity

Highly complex sentences increase the risk of partial extraction or misquotation. One idea per sentence improves inclusion accuracy.

Compound sentences work best when both clauses remain independently meaningful.
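One way to apply the "one idea per sentence" rule at scale is a simple length and punctuation check. The sketch below is an approximation: the thresholds (`MAX_WORDS`, `MAX_MARKERS`) are assumed values chosen for illustration, and counting commas, semicolons, and colons is only a rough proxy for clause count.

```python
import re

MAX_WORDS = 30    # assumed threshold; tune for your own style guide
MAX_MARKERS = 3   # assumed limit on commas, semicolons, and colons


def sentence_stats(sentence):
    """Count words and clause-marking punctuation in one sentence."""
    words = re.findall(r"[A-Za-z0-9'-]+", sentence)
    markers = re.findall(r"[,;:]", sentence)
    return {"words": len(words), "clause_markers": len(markers)}


def too_complex(sentence, max_words=MAX_WORDS, max_markers=MAX_MARKERS):
    """Flag a sentence that exceeds either threshold."""
    stats = sentence_stats(sentence)
    return stats["words"] > max_words or stats["clause_markers"] > max_markers


print(too_complex("One idea per sentence improves inclusion accuracy."))
```

Sentences that trip the check are candidates for splitting, not automatic rewrites. A long sentence with two independently meaningful clauses may still be fine.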

Use keywords naturally, not repetitively

Keyword repetition does not help LLMs the way it once helped search engines. AI systems rely on semantic understanding.

Use your primary keyword where it fits logically, then rely on related phrases and precise language to reinforce meaning.

Trusted LLM optimization techniques

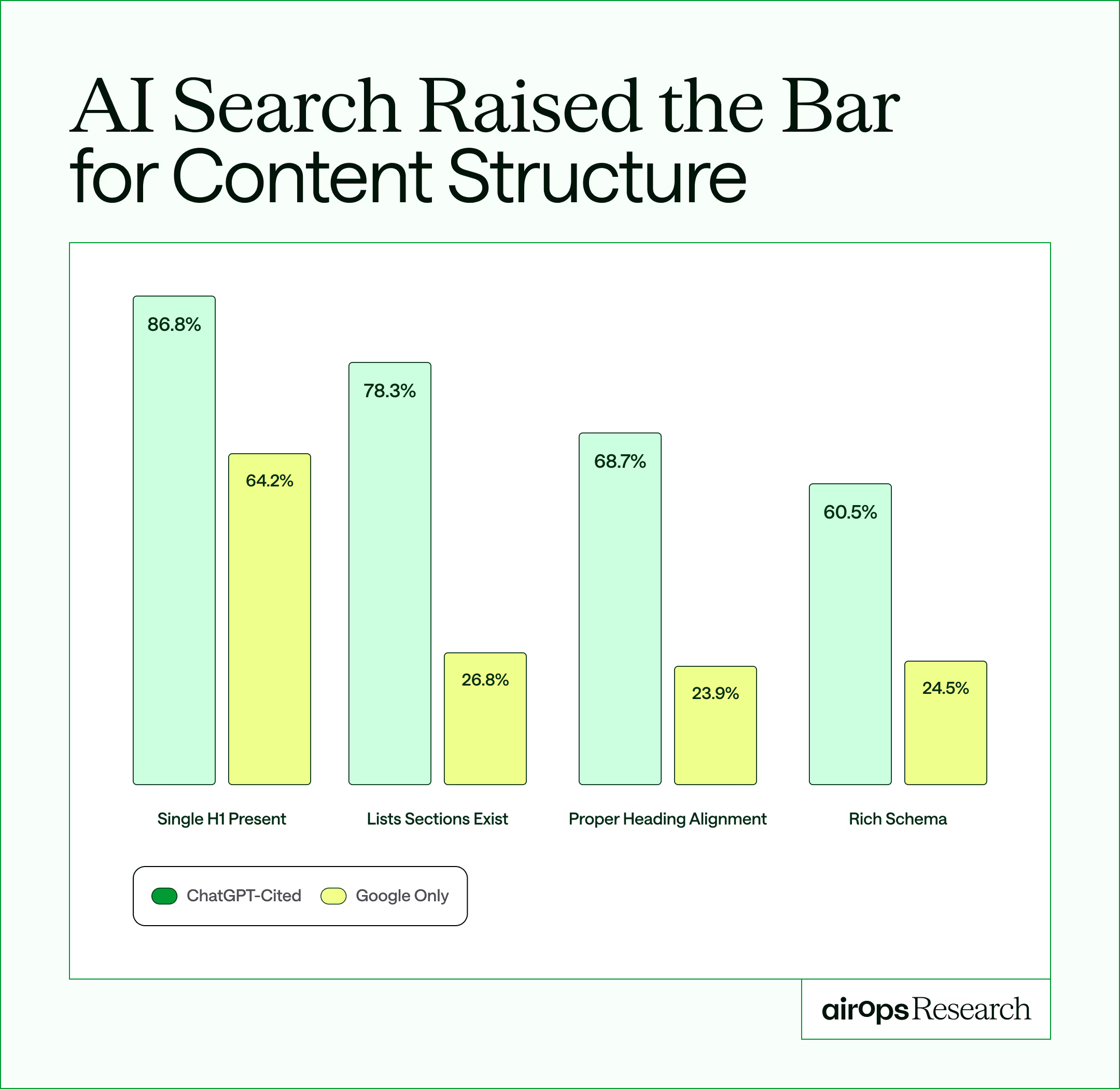

Beyond sentence-level phrasing, these techniques reinforce extractability at the page level and help AI systems identify your content as a reliable source. Pages with clean structure—clear headings, consistent formatting, and relevant schema—have been shown to earn 2.8× higher AI citation rates than poorly structured pages.

Content structuring for AI parsing

Format your content so AI can easily extract and cite information:

- Front-load definitions and key points at the start of sections

- Use clear headers that signal topic scope

- Write paragraphs that stand alone as complete references

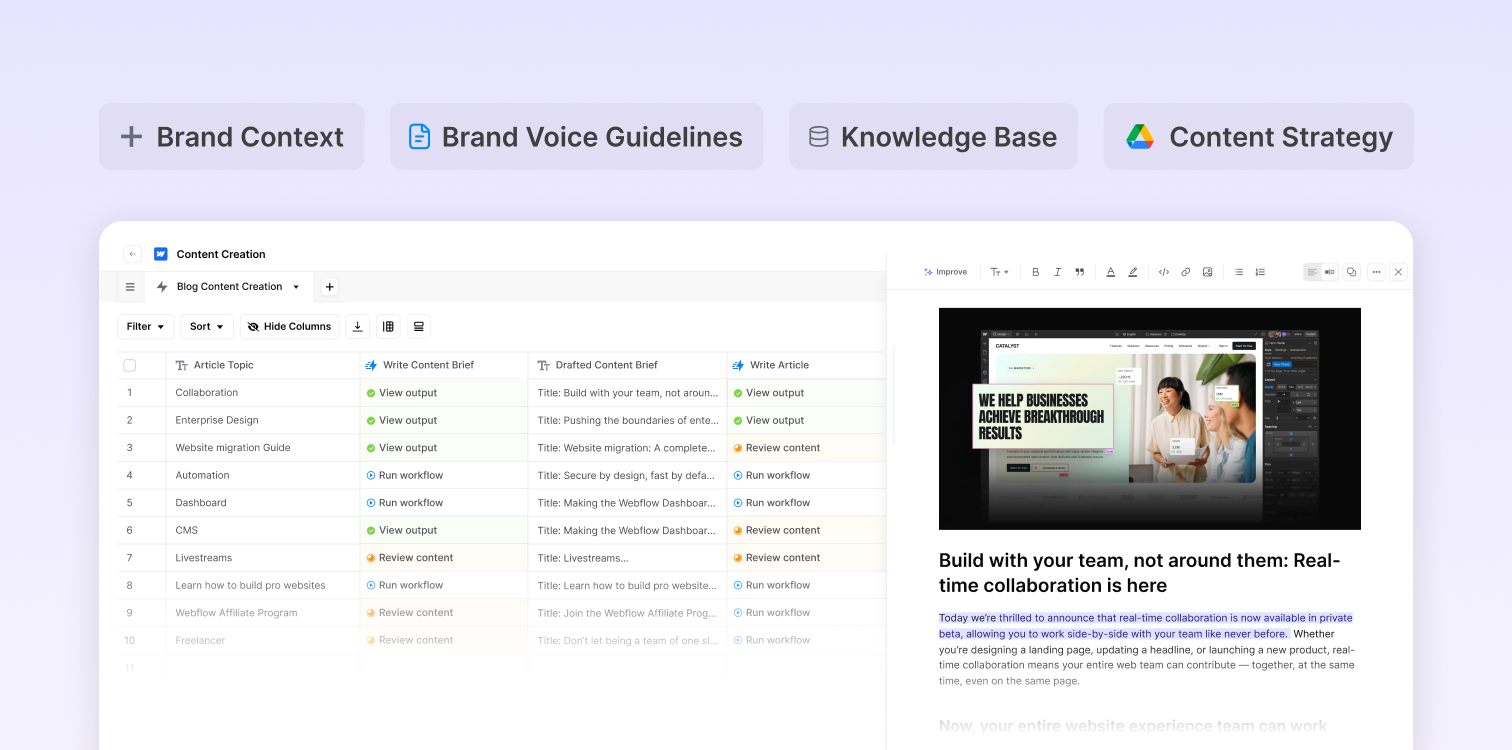

Applying these standards consistently across dozens or hundreds of pages is difficult without shared systems. Content teams increasingly rely on structured creation workflows to enforce clear headers, front-loaded definitions, and extractable passages during production—not after the fact. AirOps supports this approach by embedding structure and brand guardrails directly into content creation, helping teams ship pages that are citation-ready from the start.

Use question-and-answer blocks intentionally

Q&A formats work best when they mirror real user prompts. Each answer should remain complete without surrounding context.

Avoid filler introductions and get straight to the answer.

Apply schema where it clarifies meaning

Structured data like FAQ schema and Article schema help systems classify content, though schema alone does not guarantee citations. Use it to reinforce clarity, not replace strong writing.
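As an illustration of FAQ schema, the sketch below builds a schema.org `FAQPage` object as JSON-LD and wraps it in a script tag. The helper name `build_faq_jsonld` and the sample question are assumptions made for the example. The property names (`mainEntity`, `acceptedAnswer`, `text`) follow the schema.org vocabulary.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage dict from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


faq = build_faq_jsonld([
    (
        "What is LLM optimization?",
        "LLM optimization is the practice of shaping content so AI answer "
        "engines can easily extract, understand, and cite it.",
    ),
])

# Answers that contain "</" would need escaping before being embedded in HTML.
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(faq, indent=2)
    + "</script>"
)
print(script_tag)
```

Each answer in the markup should match the visible answer on the page, so the schema reinforces the writing instead of replacing it.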

How to optimize your website for LLMs

Your website's technical setup directly affects whether AI systems can access and cite your content.

Technical requirements for AI crawlability

- Static HTML: Ensure core content appears in the initial HTML, since AI crawlers may not execute JavaScript reliably

- Clean URL structures: Descriptive, logical URLs help AI understand page topics

- Fast load times: Slow pages may be deprioritized by crawlers

- AI bot access: Confirm relevant AI crawlers are not blocked in robots.txt
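The robots.txt item in the list above can be verified with Python's standard `urllib.robotparser`. This is a minimal sketch under stated assumptions: the user-agent tokens in `AI_CRAWLERS` are examples that should be confirmed against each vendor's current documentation, and the inline `SAMPLE_ROBOTS_TXT` stands in for a real file so the example runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; verify each one against the vendor's documentation.
AI_CRAWLERS = ["GPTBot", "PerplexityBot", "ClaudeBot", "Google-Extended"]

# Stand-in robots.txt that blocks one AI crawler and allows everything else.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def crawler_access(robots_txt, url, agents=AI_CRAWLERS):
    """Map each user-agent token to whether robots.txt lets it fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


report = crawler_access(SAMPLE_ROBOTS_TXT, "https://example.com/guide")
for agent, allowed in report.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

To audit a live site, pass in the text of the site's own robots.txt. A blocked crawler is not always a mistake, but it should be a deliberate choice.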

Content formatting for citation likelihood

Specific formatting practices increase citation probability. Create standalone, quotable passages. Provide clear definitions for key terms. Write fact-based sentences that can be lifted cleanly as sources.

Internal linking for topical authority

A strong internal linking structure connecting related content demonstrates topical expertise. AI systems recognize sites with comprehensive coverage of a subject as more authoritative than sites with scattered, unconnected pages.

Offsite signals that reinforce LLM trust

LLMs draw from broad datasets. Consistent brand mentions across trusted sources increase confidence. Examples of such sources:

- Industry publications

- Research reports

- Wikipedia and reference sites

- Earned media and expert quotes

Digital PR still matters, but its impact now extends beyond referral traffic into AI citation probability.

Visibility in AI search is rarely permanent. Brands that earn both direct citations and broader mentions across trusted third-party sources are 40% more likely to resurface across repeated AI answer runs than citation-only brands. Mentions reinforce authority signals even when a specific page is not quoted, helping stabilize long-term visibility.

How to measure LLM optimization success

Traditional metrics like rank and traffic don't tell the whole story. LLM optimization requires new measurement approaches.

Industry leaders are already seeing this shift in practice, especially for informational content surfaced through AI answers. Lily Ray, SEO Director at Amsive Digital, explained it well in a recent AirOps webinar:

“If your main metric is clicks and traffic, it’s going to be a really hard time. We’re not going to see the levels of traffic we saw in 2022 for many sites—especially upper-funnel informational content that AI can answer quickly.” — Lily Ray

LLM visibility metrics that matter

- Citation frequency: How often AI engines cite your content for relevant queries

- Brand mention accuracy: Whether AI accurately represents your brand and key messages

- Query coverage: The range of relevant questions where your brand appears as a source

These metrics matter because AI systems do not respond to a single query in isolation. As discussed in AirOps’ webinar on query fan-out, AI answers often trigger dozens of related searches behind the scenes to assemble a response. That fan-out behavior means visibility depends on how well your content covers related phrasing, adjacent questions, and supporting concepts—not just one primary query. Measuring citation frequency and query coverage helps reveal whether your content survives that expansion or gets replaced as AI systems synthesize answers.
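Citation frequency and query coverage can be computed from a log of repeated prompt runs. The sketch below assumes a hypothetical record format (`query` plus a list of `cited_domains` for each run) and hypothetical sample data. It defines citation frequency as the share of all runs that cite the brand, and query coverage as the share of distinct queries with at least one citing run. Other definitions are possible.

```python
from collections import defaultdict


def visibility_metrics(runs, brand_domain):
    """Compute citation frequency and query coverage from logged answer runs."""
    per_query = defaultdict(lambda: [0, 0])  # query -> [total runs, citing runs]
    for run in runs:
        counts = per_query[run["query"]]
        counts[0] += 1
        if brand_domain in run["cited_domains"]:
            counts[1] += 1

    total_runs = sum(c[0] for c in per_query.values())
    citing_runs = sum(c[1] for c in per_query.values())
    covered_queries = sum(1 for c in per_query.values() if c[1] > 0)
    return {
        "citation_frequency": citing_runs / total_runs if total_runs else 0.0,
        "query_coverage": covered_queries / len(per_query) if per_query else 0.0,
    }


# Hypothetical log: two queries, two runs each.
sample_runs = [
    {"query": "what is llm optimization", "cited_domains": ["example.com", "other.org"]},
    {"query": "what is llm optimization", "cited_domains": ["other.org"]},
    {"query": "llm optimization vs seo", "cited_domains": ["other.org"]},
    {"query": "llm optimization vs seo", "cited_domains": []},
]
metrics = visibility_metrics(sample_runs, "example.com")
print(metrics)
```

Logging several runs per query matters because answers vary from run to run. A single run can overstate or understate visibility.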

Freshness is a visibility signal, not a maintenance task

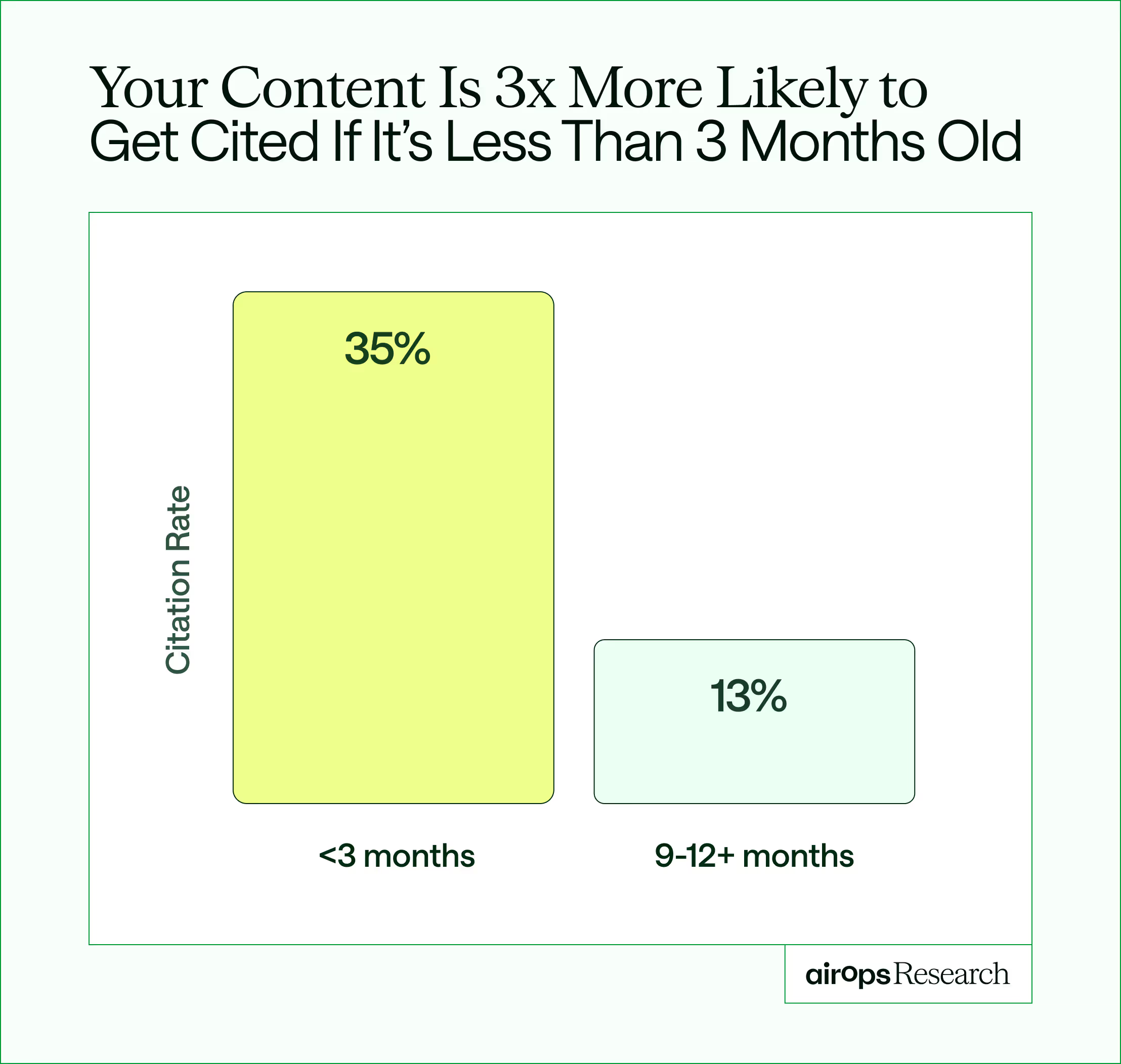

LLM optimization is not a one-time effort. Pages that are not updated at least quarterly are 3× more likely to lose AI citations than recently refreshed pages. As AI systems continuously re-evaluate sources, stale content becomes less reliable, even if it once performed well.

Treat content freshness as a visibility control rather than a maintenance task. Regular, substantive updates help preserve citation eligibility and reduce the risk of gradual disappearance from AI answers.

Maintaining that cadence is often where teams struggle. Refreshing content at scale requires knowing what to update, why it matters, and when visibility starts to slip. AirOps supports this by connecting AI visibility data to content refresh workflows, helping teams prioritize updates based on real citation loss rather than arbitrary timelines.
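Before citation-loss data is available, a date-based check is a simple starting point for a refresh queue. The sketch below assumes a hypothetical mapping of page paths to last substantive update dates and treats 90 days as a rough stand-in for "quarterly". Both are illustrative choices.

```python
from datetime import date, timedelta

REFRESH_WINDOW = timedelta(days=90)  # assumed approximation of one quarter


def stale_pages(last_updated, today):
    """Return page paths whose last update is older than the refresh window."""
    return sorted(
        path
        for path, updated in last_updated.items()
        if today - updated > REFRESH_WINDOW
    )


# Hypothetical inventory of pages and their last substantive update.
pages = {
    "/guides/llm-optimization": date(2025, 1, 1),
    "/guides/aeo-basics": date(2025, 5, 1),
}
print(stale_pages(pages, today=date(2025, 6, 1)))
```

Passing `today` explicitly keeps the check reproducible. A production version would likely combine the age signal with observed citation changes.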

Track visibility directly

Run consistent prompts in ChatGPT, Perplexity, and Gemini to observe how and when your brand appears in AI-generated answers. Manual prompting helps establish an initial baseline for citation patterns, coverage gaps, and early visibility signals.

Over time, teams often formalize this process by tracking how frequently their brand is cited, which queries trigger those citations, and where visibility drops as AI answers change from run to run. This operational view makes it easier to prioritize updates based on real exposure rather than assumptions.

Specialized platforms can automate this tracking at scale. AirOps, for example, monitors AI search visibility over time and shows where brands gain or lose citations as answers evolve.

Agentic traffic attribution

Agentic traffic refers to website visits originating from AI-assisted browsing or clicks on citations within AI responses. You can identify agentic traffic by analyzing referral strings and using UTM parameters in URLs likely to be scraped and cited.
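Both attribution methods can be sketched with Python's standard `urllib.parse`. The referrer hostnames in `AI_REFERRER_HOSTS` and the UTM values are assumptions for illustration. Actual referrer strings vary by product and can change, so check them against your own analytics data.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse

# Assumed referrer hostnames; confirm against real referral data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
}


def classify_referrer(referrer):
    """Return the AI product name for a referrer URL, or None if unrecognized."""
    host = (urlparse(referrer).hostname or "").lower()
    return AI_REFERRER_HOSTS.get(host)


def add_utm(url, source, medium="ai-citation"):
    """Append UTM parameters to a URL while keeping existing query parameters."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium})
    return urlunparse(parts._replace(query=urlencode(query)))


print(classify_referrer("https://chatgpt.com/"))
print(add_utm("https://example.com/guide?ref=1", "llm"))
```

Referrer matching only captures visits where the browser sends a referrer. Tagged URLs help cover cases where the referrer is stripped.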

LLM optimization best practices

Here's a practical checklist for getting started:

- Audit your current LLM visibility: Query major AI answer engines with your most important brand-relevant questions to establish a baseline

- Create authoritative and unique content: Prioritize original research, expert opinions, and data that AI can't replicate from other sources

- Structure content for AI consumption: Apply formatting best practices including clear headers, standalone facts, Q&A formats, and relevant schema markup

- Build consistent brand mentions across the web: Expand your presence on authoritative third-party sites to reinforce credibility signals

- Monitor and iterate continuously: Establish a regular cadence for monitoring visibility and adjust based on results

A clearer path to AI search visibility

LLM optimization favors content that states ideas clearly, defines terms early, and removes ambiguity. As AI answers replace lists of links, brands that write for extractability earn more visibility without abandoning SEO fundamentals.

The goal is not to optimize for one system or another. It is to create content that performs across search results and AI-generated answers, then support that content with systems that make visibility measurable and improvable over time. AirOps supports this shift by showing where your content appears in AI search, where it gets overlooked, and what to prioritize next—so clarity, structure, and freshness drive sustained visibility over time.

Book a demo to see how AirOps helps teams earn more AI citations and brand mentions at scale.
