How to Test Content Visibility in Perplexity and ChatGPT

- Google rankings stopped predicting visibility inside ChatGPT and Perplexity

- Each AI platform pulled from different indexes and returned different sources

- Running the same queries across sessions revealed which pages AI tools trusted

- Brand mentions shaped perception, while live citations produced measurable traffic

- Citation gaps exposed weaknesses in authority, structure, and topical depth

Your content can rank on page one of Google and still stay invisible in ChatGPT and Perplexity.

AI tools pull from different sources, apply different citation rules, and refresh on different timelines than traditional search engines, so rankings no longer predict visibility.

This guide shows how to test whether Perplexity and ChatGPT mention, cite, or ignore your content, and how to turn those findings into next steps you can act on.

Why AI Search visibility testing matters

Testing content visibility in AI search means asking real questions inside tools like ChatGPT and Perplexity, then tracking whether your brand appears and how it appears. You can run these tests manually or automate them at scale with monitoring tools.

AI search visibility answers one core question: Do AI tools surface your content when users ask about topics you own?

Visibility also changes fast. In the 2026 State of AI Search, only 30% of brands stayed visible from one answer to the next, and only 20% remained visible across five consecutive runs. That volatility explains why one-time checks mislead. Real insight only appears when you repeat the same queries over time and track movement, not snapshots.

“SEOs must rethink how they measure success — AI overviews change what visibility looks like.” — Aleyda Solis

That shift in measurement explains why teams now test AI answers directly instead of relying on rankings as a proxy for visibility. As AI summaries replace traditional result pages, the mechanics of visibility change as well: what matters shifts from link position to how clearly your content answers the question inside the model’s response.

When your brand fails to appear in AI answers, you disappear from a fast-growing discovery channel. That loss rarely shows up in Google Search Console or rank trackers.

Why this matters:

- Brand perception: AI answers often create the first impression of your company

- Traffic quality: Citations send readers straight to your site

- Competitive insight: Testing shows where competitors appear and you don’t

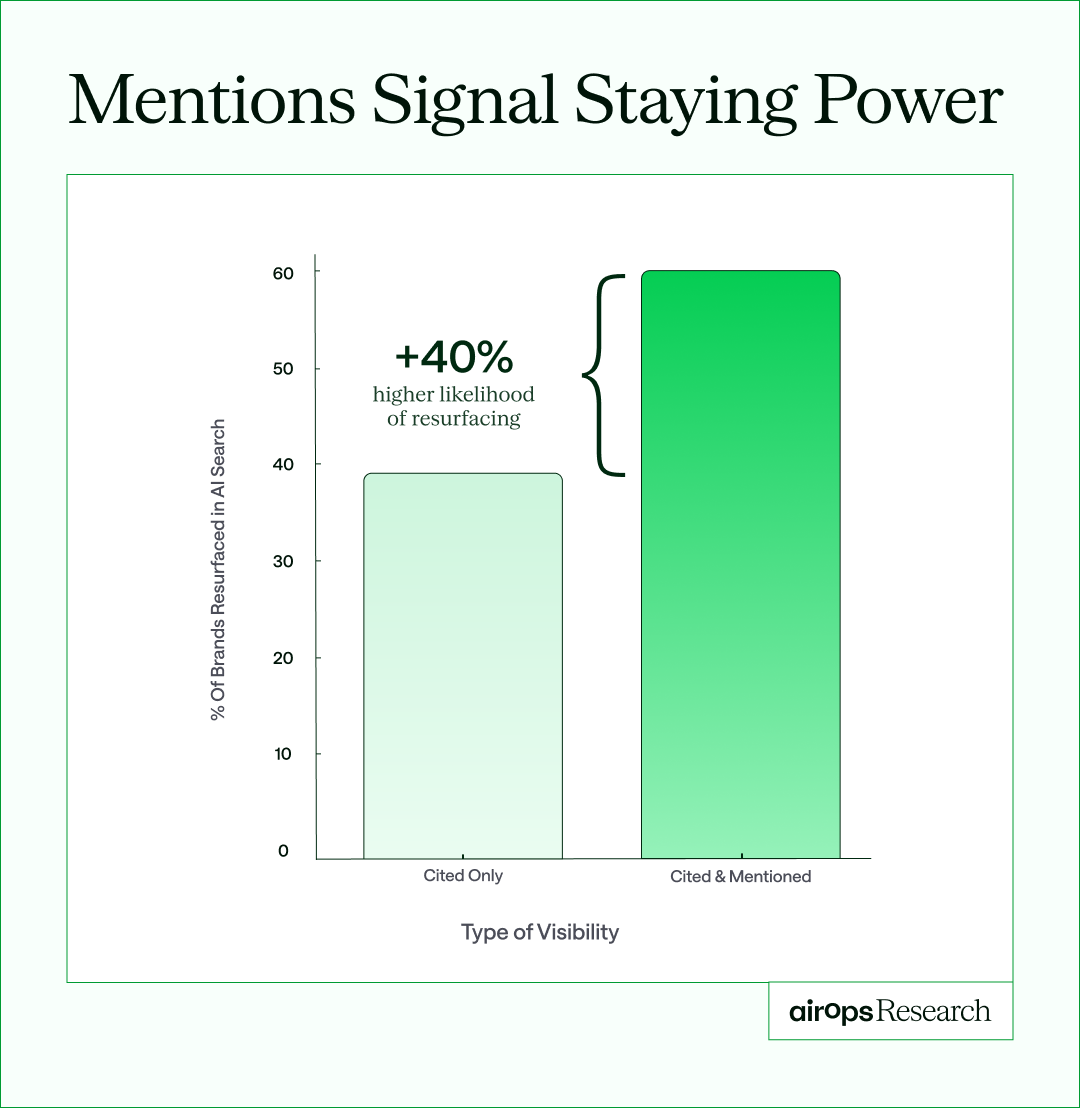

Visibility stabilizes when brands earn both mentions and citations. Brands that showed up with both signals were 40% more likely to resurface across multiple runs than citation-only brands, which makes mention tracking as important as citation tracking.

That stability often comes from consistent third-party references across reviews, community threads, and editorial coverage rather than from your own site alone.

How ChatGPT and Perplexity surface content differently

Each platform retrieves and presents information in its own way. Those differences change how you read test results.

How ChatGPT selects and cites sources

ChatGPT pulls from Bing’s index and its training data. When browsing is on, it accesses live web results. Free and Plus versions behave differently. Plus users often see fresher answers and more links.

ChatGPT citation behavior changes from query to query. Some answers show inline links, some show footnotes, and others show no sources at all. That inconsistency makes repeat testing a requirement, not a nice-to-have.

How Perplexity retrieves and displays results

Perplexity works as a search-first AI. It always shows a sources panel and crawls the web in near real time by default. Every response includes a numbered list of domains that shape the answer.

This transparency makes Perplexity easier to test because you can see which pages influence the output.

Key differences that affect testing

ChatGPT and Perplexity do not surface information in the same way. These platform differences shape how you interpret results and why you need to test both tools instead of treating them as interchangeable.

- Source display: ChatGPT sometimes shows citations and sometimes shows none. Perplexity always includes a sources panel with the domains used to generate the answer.

- Real-time search: ChatGPT only pulls live results when browsing is on. Perplexity runs a real-time search by default.

- Index source: ChatGPT relies on Bing plus its training data. Perplexity blends multiple search indexes.

- Citation format: ChatGPT uses inline links or footnotes when it cites. Perplexity uses a numbered source list tied directly to the answer.

How to test your content visibility in ChatGPT

The process stays simple, but consistency shapes the quality of your results.

1. Open a new ChatGPT session

Start with a clean session to avoid prior context shaping responses. Use incognito mode or log out entirely to establish a baseline.

2. Enter brand and product queries

Ask direct questions such as:

- “What is Webflow?”

- “What does Ramp do?”

- “What is Carta used for?”

Record whether ChatGPT mentions your brand and whether the description matches your positioning.

3. Test informational and comparison queries

Ask questions your buyers ask before they know your brand:

- “What are the best tools for corporate card management?”

- “How does Ramp compare to Brex?”

These queries show whether ChatGPT recommends you alongside competitors or skips you.

4. Document mentions, citations, and links

Capture each response and note whether it includes:

- A direct link to your content

- A brand mention without a link

- No mention at all

Mentions build awareness, and citations drive traffic, so track both.
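The three outcomes above can be captured with a small helper. This is a minimal sketch, assuming you paste in the answer text and any cited URLs by hand. The function name `classify_response`, the brand, and the domain are hypothetical placeholders, not part of any tool's API.

```python
from urllib.parse import urlparse


def classify_response(answer_text, cited_urls, brand, domain):
    """Return 'cited', 'mentioned', or 'absent' for one AI answer."""
    domain = domain.lower()
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        # Match the domain itself or any subdomain (e.g. www.example.com).
        if host == domain or host.endswith("." + domain):
            return "cited"
    # Plain substring match: simple, but short brand names can over-match.
    if brand.lower() in answer_text.lower():
        return "mentioned"
    return "absent"


print(classify_response(
    "Example is a spend management tool.",
    ["https://www.example.com/pricing"],
    brand="Example",
    domain="example.com",
))
```

The substring check is deliberately naive. A short brand name such as "Ramp" would also match "ramped", so review borderline results by hand.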

5. Repeat across multiple sessions

Results change from day to day. Run the same queries across multiple sessions over one to two weeks, then review the responses together to surface trends.
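Reviewing runs together is easier with a per-query visibility rate. The sketch below assumes each run has already been labeled by hand as `cited`, `mentioned`, or `absent`. Those labels and the function name are illustrative choices.

```python
from collections import defaultdict


def visibility_rates(runs):
    """runs: list of (query, outcome) tuples.

    Returns {query: share of runs in which the brand appeared at all}.
    """
    seen = defaultdict(int)
    total = defaultdict(int)
    for query, outcome in runs:
        total[query] += 1
        if outcome != "absent":
            seen[query] += 1
    return {query: seen[query] / total[query] for query in total}


runs = [
    ("What is Example?", "cited"),
    ("What is Example?", "absent"),
    ("What is Example?", "mentioned"),
    ("What is Example?", "absent"),
    ("Best spend tools", "absent"),
]
print(visibility_rates(runs))
```

A rate that stays low across one to two weeks points to a real gap rather than run-to-run noise.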

How to test your content visibility in Perplexity

Perplexity uses a similar process, but its source signals are clearer.

1. Use Perplexity’s search interface

Go to perplexity.ai and enter your query. You don't need an account for basic testing.

2. Run brand and topic queries

Use the same queries you tested in ChatGPT to keep comparisons clean.

3. Review the sources panel

Check whether your domain appears in the list. Sources near the top usually shape the answer more than those listed last.

4. Compare free and Pro results

Perplexity Pro runs on different models and may surface different sources. If you have access, test both versions to spot gaps.

5. Record citation URLs and context

Log the exact URLs cited, not only the domain. Note how Perplexity uses the content and which formats show up most often.
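One way to keep exact URLs and their order is to turn the sources panel into rows. This sketch assumes you copy the source URLs out of the panel in the order shown. The field names and `example.com` are hypothetical.

```python
from urllib.parse import urlparse


def citation_rows(query, source_urls, domain):
    """Turn an ordered source list into rows and flag URLs on your own domain."""
    domain = domain.lower()
    rows = []
    for position, url in enumerate(source_urls, start=1):
        host = (urlparse(url).hostname or "").lower()
        rows.append({
            "query": query,
            "position": position,  # 1 = first source listed
            "url": url,            # keep the full URL, not only the domain
            "host": host,
            "is_ours": host == domain or host.endswith("." + domain),
        })
    return rows


for row in citation_rows(
    "How do I manage corporate card spend?",
    ["https://other.io/guide", "https://docs.example.com/spend-controls"],
    domain="example.com",
):
    print(row["position"], row["is_ours"], row["url"])
```

Storing the position alongside the URL lets you check later whether your pages tend to sit near the top of the list or at the bottom.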

Types of queries to test for AI Search visibility

Different query types surface different signals. Organize tests by intent.

- Brand queries: “What is Webflow?”

- Product queries: “What features does Ramp include?”

- Problem-solution queries: “How do I manage corporate card spend?”

- Comparison queries: “Alternatives to Carta”

- Long-tail informational queries: Narrow questions tied to your expertise

Brand queries test recognition. Comparison queries test positioning. Long-tail queries test topical depth.

Freshness matters most for high-intent queries. For commercial searches, about 83% of AI citations came from pages updated within the past 12 months, and over 60% came from pages refreshed within the past 6 months. Product, pricing, and comparison pages need the tightest refresh cycles if you want them to keep surfacing.

AI visibility testing tools

Teams test AI search visibility in three main ways.

Manual query testing

Manual testing works well for audits and spot checks. It costs nothing but time. As query lists grow, the effort adds up fast.

Third-party monitoring platforms

Tools like AirOps help monitor AI search mentions at scale. These platforms automate query testing across multiple AI tools and track citation changes over time.

Custom tracking spreadsheets

Spreadsheets still work for lean teams. Track:

- Query

- Platform

- Date

- Mentioned (yes or no)

- Cited with link (yes or no)

- Citation URL

- Notes
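If you prefer a CSV file over a hand-edited spreadsheet, the same columns can be written with Python's standard `csv` module. This is a minimal sketch. The snake_case column names and the sample row are assumptions made for illustration.

```python
import csv
import io

# Column names mirror the tracking list above.
FIELDS = [
    "query", "platform", "date", "mentioned",
    "cited_with_link", "citation_url", "notes",
]


def write_log(handle, rows):
    """Write a header plus one line per test result to an open text file."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        writer.writerow(row)  # missing keys are written as empty cells


buffer = io.StringIO()  # stands in for open("ai_visibility.csv", "w", newline="")
write_log(buffer, [{
    "query": "What is Webflow?",
    "platform": "Perplexity",
    "date": "2026-01-15",
    "mentioned": "yes",
    "cited_with_link": "no",
    "citation_url": "",
}])
print(buffer.getvalue())
```

`DictWriter` raises a `ValueError` on unexpected keys, which helps keep every row on the same schema.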

How to track AI visibility over time

One-time testing shows a snapshot. Ongoing tracking reveals direction.

Create a standardized query list

Build a fixed set of high-value queries and reuse it every cycle. Consistency matters more than volume.

Establish a testing cadence

Testing weekly or every two weeks works well for most teams. Treat monthly checks as the minimum.

Pages that teams did not refresh quarterly were 3× more likely to lose AI citations than recently updated pages. Use that benchmark to shape your testing rhythm and flag pages that need attention before they disappear.
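To flag pages before they fall outside a quarterly refresh window, compare each page's last update date against a cutoff. The sketch below treats a quarter as 90 days, which is an approximation. The page paths and dates are made up.

```python
from datetime import date, timedelta


def stale_pages(last_updated, today, max_age_days=90):
    """Return URLs last updated more than max_age_days ago, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [
        (updated, url)
        for url, updated in last_updated.items()
        if updated < cutoff
    ]
    return [url for updated, url in sorted(stale)]


pages = {
    "/pricing": date(2025, 1, 1),
    "/blog/launch": date(2025, 5, 20),
    "/compare": date(2024, 11, 1),
}
print(stale_pages(pages, today=date(2025, 6, 1)))
```

Passing `today` explicitly keeps the function deterministic. In a scheduled job you would pass `date.today()`.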

Centralize results

Store results in one shared doc or dashboard so patterns become obvious across time.

Analyze trends and gaps

Look for repeat issues:

- Pages that never get cited

- Competitors that appear consistently

- Query types where visibility improves after updates
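The first two checks can be run against logged results. This sketch assumes each logged row carries a `citation_url` field and a hypothetical `competitors` list that you fill in while reviewing answers. The 80% threshold is an arbitrary starting point, not a benchmark from the data above.

```python
from collections import Counter


def never_cited(tracked_urls, rows):
    """Tracked URLs that never show up as a citation in any logged row."""
    cited = {row["citation_url"] for row in rows if row.get("citation_url")}
    return sorted(set(tracked_urls) - cited)


def consistent_competitors(rows, min_share=0.8):
    """Competitors named in at least min_share of the logged answers."""
    counts = Counter()
    for row in rows:
        counts.update(set(row.get("competitors", [])))
    total = len(rows)
    return sorted(
        name for name, n in counts.items() if total and n / total >= min_share
    )


rows = [
    {"citation_url": "https://example.com/a", "competitors": ["CompetitorA"]},
    {"citation_url": "", "competitors": ["CompetitorA", "CompetitorB"]},
    {"citation_url": "", "competitors": ["CompetitorA"]},
]
print(never_cited(["https://example.com/a", "https://example.com/b"], rows))
print(consistent_competitors(rows))
```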

What to do when your content doesn't appear in AI Search

When testing reveals visibility gaps, you have several options for improvement.

- Audit authority signals: Check if your content includes author credentials, citations to external sources, and clear expertise indicators.

- Improve structure and answer readiness: Pages that use clear headings and schema show 2.8× higher citation rates than poorly structured pages. Add direct answers to common questions near the top, define key terms early, and use schema markup to clarify page intent for AI systems.

- Strengthen topical clusters and internal links: Build related content around core topics. Link between pages to signal depth and relevance, because isolated pages perform worse than interconnected content clusters. Pair that internal work with earning external links, since referring domains predict ChatGPT citations more than any other factor.

- Monitor changes after updates: After making improvements, re-test the same queries. Changes may take days or weeks to appear in AI responses, so patience is important. Track whether visibility improves over time and note which changes had the biggest impact.
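For the schema markup step, one common option is schema.org `FAQPage` JSON-LD. This is a minimal sketch that builds the markup from question and answer pairs. The sample pair is a placeholder, and whether a given AI tool reads this markup is an assumption rather than something the platforms document here.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


print(faq_jsonld([
    ("What is Example?", "Example is a spend management platform."),
]))
```

The output goes inside a `<script type="application/ld+json">` tag on the page that contains the same questions and answers as visible text.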

Key takeaways

- AI search now shapes how buyers discover brands

- Regular testing reveals how tools interpret your content and where competitors win attention

- Clear answers, visible expertise, and connected topics drive stronger AI visibility

Want to monitor your visibility in ChatGPT, Perplexity, and AI Mode? Try AirOps.

AirOps helps you understand how your brand shows up across ChatGPT, Perplexity, and AI search, so you can track visibility with confidence. Our Insights layer goes beyond traditional rankings to show when your content is being cited, where you are gaining or losing authority, and what to prioritize next. Instead of static dashboards, AirOps turns AI search visibility into clear, actionable signals that help teams improve content quality and stay competitive.

Turn AI answers into a measurable channel

AI search now shapes how buyers discover and evaluate brands. Teams that test visibility consistently gain a clear view of how tools like ChatGPT and Perplexity understand their content and where competitors earn attention.

AirOps centralizes mentions, citations, and gaps across AI tools so teams can move from observation to action without manual checks and focus on the updates that actually change outcomes.

Book a demo to see how AirOps helps teams track AI search visibility, identify gaps, and earn more citations and mentions at scale.

FAQs

How often do ChatGPT and Perplexity update their indexes with new content?

ChatGPT's browsing feature pulls from Bing's index, which updates continuously, but its training data refreshes less often, typically with major model updates. Perplexity crawls the web in near real time by default, so new content can appear in results within hours to days of publication rather than weeks.

Can I block my content from appearing in AI search results?

Yes. You can use robots.txt directives to block specific AI crawlers such as GPTBot (OpenAI) and PerplexityBot, though blocking them can also keep your content from being cited. Consider whether blocking serves your goals, since visibility in AI search increasingly drives discovery and traffic.
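Before or after changing robots.txt, you can check what a given set of rules allows with Python's standard `urllib.robotparser`. The rules below are a made-up sample, not a recommendation. Only the `GPTBot` and `PerplexityBot` tokens come from the answer above, and the platforms may run other crawlers this sample does not cover.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for illustration only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: PerplexityBot
Disallow: /private/

User-agent: *
Allow: /
"""


def crawler_access(robots_txt, agents, url):
    """Return {agent: True/False} for whether each agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


print(crawler_access(
    ROBOTS_TXT,
    ["GPTBot", "PerplexityBot"],
    "https://example.com/blog/post",
))
```

With this sample, `GPTBot` is blocked from the whole site, while `PerplexityBot` is blocked only under `/private/`.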

Does content length impact whether AI tools cite my pages?

Content length alone does not determine citation likelihood, but comprehensive pages that thoroughly answer questions tend to perform better than thin content. AI tools favor pages that provide complete, well-structured answers with clear expertise signals over lengthy but unfocused articles.
