Context Engineering vs Prompt Engineering: How AI Systems Evolved

- Prompt engineering controls how a model responds to a single task, from format to tone

- Context engineering governs what the model can see, remember, and act on before it answers

- AI agents and multi-step systems depend on memory, retrieval, and tool access to stay coherent

- Teams get dependable results when they treat prompts as one layer inside a broader system

Prompt engineering focuses on the words inside a single request. Context engineering shapes everything that surrounds those words.

That distinction changes how teams build AI systems that hold up beyond experiments.

This guide explains how prompt engineering and context engineering differ, where each approach fits, and how teams combine them as AI products grow past one-off use.

What is prompt engineering?

Prompt engineering means writing clear, specific text inputs to get desired outputs from a large language model (LLM). Each request stands alone: you ask a question, the model responds, and the interaction ends.

Common prompt techniques include:

- Role-playing: Assigning the AI a specific persona, like "You are a senior marketing strategist."

- Few-shot examples: Providing sample input-output pairs within the prompt itself.

- Output structure: Defining the format the model should return, such as a bulleted list or JSON.
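As a rough illustration, the three techniques above can be combined into a single prompt string. The function name `build_prompt` and the sample strings are hypothetical; the result would be sent to whichever LLM API you use.

```python
def build_prompt(role, examples, output_format, task):
    """Combine a persona, few-shot examples, and an output format into one prompt."""
    lines = [f"You are {role}.", ""]
    # Few-shot examples: sample input-output pairs placed inside the prompt itself.
    for sample_input, sample_output in examples:
        lines.append(f"Input: {sample_input}")
        lines.append(f"Output: {sample_output}")
        lines.append("")
    # Output structure: state the format the model should return.
    lines.append(f"Respond in this format: {output_format}")
    lines.append(f"Input: {task}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_prompt(
    role="a senior marketing strategist",
    examples=[("Reusable water bottle", "Stay hydrated without the waste.")],
    output_format="one sentence, under 15 words",
    task="Noise-cancelling headphones",
)
print(prompt)
```

Everything the model needs sits inside this one string, which is what makes the approach simple for single-turn tasks and repetitive once the same background has to be pasted into every request.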

Prompt engineering fits single-turn tasks like:

- Summarizing a document

- Writing an email

- Creating a short product description

Each prompt lives in isolation. The model carries no memory across requests and sees only the text inside that one input.

What is context engineering?

Context engineering designs the full information environment around an LLM. Instead of shaping one request, it controls what the model can access when it generates a response.

Anthropic describes context engineering as “the natural progression of prompt engineering.” Prompts act as the question. Context engineering builds the library and the librarian that support the answer.

A context system usually includes:

- Retrieved knowledge (RAG): External data pulled in dynamically at query time

- Memory: Conversation history and user preferences across sessions

- Tools: External actions the AI can take, like searching databases or calling APIs

- System rules: Persistent instructions that govern behavior regardless of user input

Teams rely on context engineering for complex systems like support bots, research agents, and multi-step content creation.

Prompt engineering vs context engineering: key differences

The difference shows up in how much responsibility sits with the request versus the system.

How scope changes between prompts and context

With prompts, instructions stay fixed until someone edits them. Each new task requires fresh background text.

With context engineering, the system assembles information dynamically based on the task, the user, and past activity. The same question can produce different answers because the surrounding data changes.
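A minimal sketch of that dynamic assembly, assuming a hypothetical in-memory purchase table and return policy in place of a real database and knowledge base:

```python
# Hypothetical stand-ins for a real user database and knowledge base.
PURCHASES = {
    "ana": ["Trail running shoes"],
    "ben": ["Espresso machine"],
}
RETURN_POLICY = "Items can be returned within 30 days of delivery."


def assemble_context(user_id, question):
    """Build the model input from system data plus the user's own history."""
    history = PURCHASES.get(user_id, [])
    purchases = ", ".join(history) if history else "none on record"
    return (
        f"Policy: {RETURN_POLICY}\n"
        f"Customer purchases: {purchases}\n"
        f"Question: {question}"
    )


question = "Can I return my last order?"
print(assemble_context("ana", question))
print(assemble_context("ben", question))
```

The question text is identical in both calls; only the surrounding data differs, so the model would be answering about different orders.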

“Summarize this document” works as a prompt. A coding assistant that recalls project structure or a support agent that tracks purchase history depends on retrieval, memory, and coordination across steps.

Why teams move from prompts to context

Simple prompts break down when teams try to scale:

- Long conversations lose coherence without memory

- Agents need access to tools and outside data

- Production apps need consistent outputs across thousands of sessions

- Manual tuning becomes a maintenance burden

A team might spend hours refining one prompt. That approach collapses when the system serves thousands of users each day. Context engineering replaces manual tweaks with reusable system design.

How context engineering and prompt engineering work together

Prompt engineering still matters. It acts as one layer inside a broader system.

Context engineering builds the environment. Prompt engineering tells the model how to use that environment.

A system might retrieve documents, load memory, and expose tools. The prompt then directs the model to analyze, compare, or act on that material. Remove either piece and the system struggles.

Neither approach works well in isolation. A perfect prompt without proper context lacks the information it requires. A rich context without clear instructions leaves the model guessing about what you actually want.

When to use prompt engineering vs context engineering

The easiest way to decide which approach to use is to look at how much state, data, and coordination the task requires.

Simple queries and one-time tasks

Use prompt engineering for tasks like:

- Drafting a short email

- Summarizing a report

- Answering a focused question

Complex agents and multi-step systems

Use context engineering when building agents that research topics, draft content, or coordinate actions across tools.

Content creation at scale

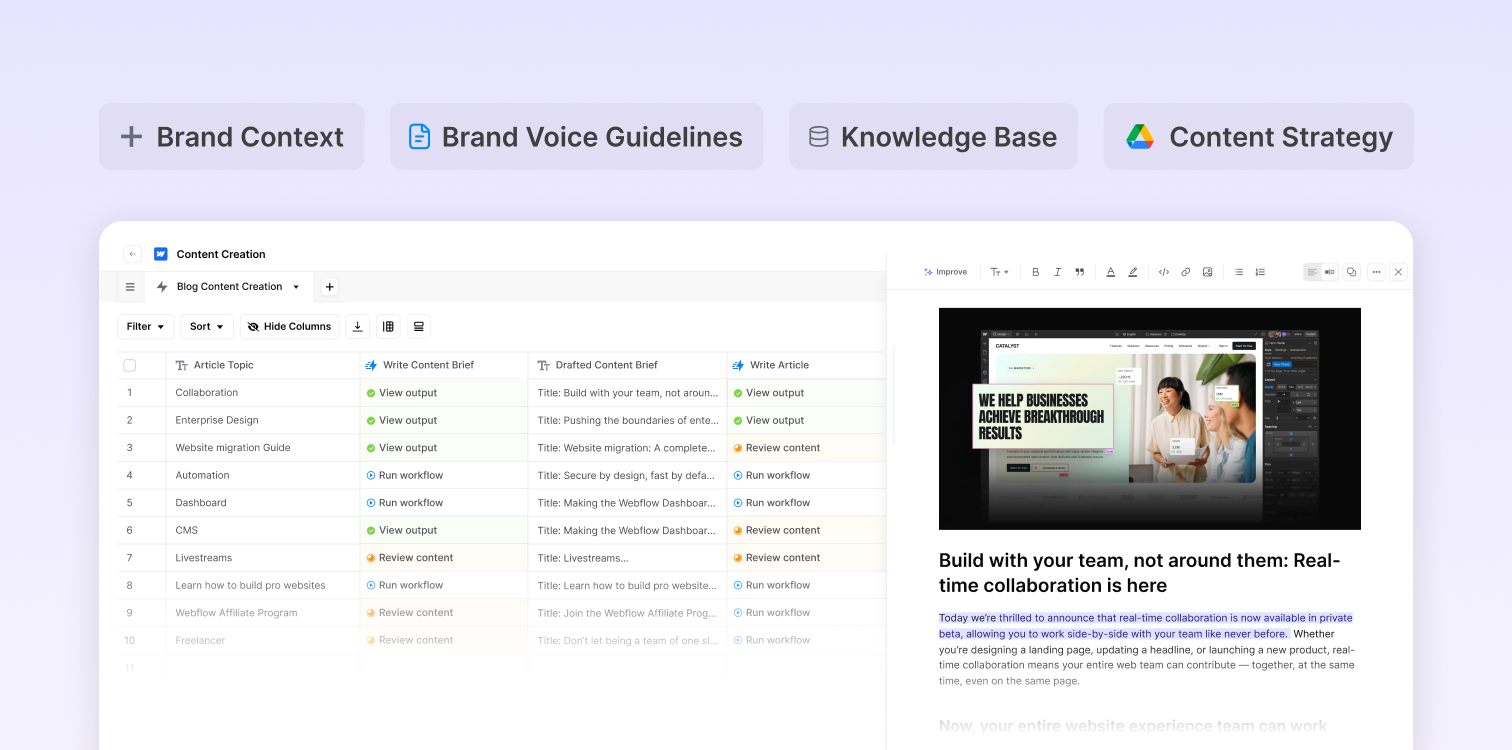

Teams that publish large volumes of on-brand content benefit from storing brand rules, data sources, and voice guidance in context rather than pasting them into every request.

Tip: If you keep copying the same background text into every prompt, move that material into your system context.

What context engineering looks like in practice

After teams stop pasting guidance into every request, they need a way to manage that context over time.

AirOps gives content teams a shared system for storing brand knowledge, structuring editorial guidance, and connecting live performance signals to creation, so context updates flow automatically into new content rather than living in scattered docs.

Instead of rewriting instructions for every piece of content, teams update a central layer once and apply it across their system.

Key components of context engineering for AI

Context engineering works when teams treat information as part of the system, not something they paste into each request. These components define what the model can see, remember, and act on before it ever generates an answer.

System rules

System rules define how the model behaves in every interaction. They set standards for tone, compliance, and formatting so responses stay consistent no matter what the user asks.

Retrieved knowledge

Retrieval-Augmented Generation (RAG) feeds the model fresh, brand-specific data such as product details, documentation, or editorial guidance. Structure plays a central role here.

AirOps research found that pages with clean structure — clear headings and schema — earned 2.8× higher citation rates than poorly structured pages. That lift comes from system design, not clever wording inside a single prompt.
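To make the retrieval step concrete, here is a deliberately simple sketch. It scores documents by word overlap with the query, which is a stand-in for the embedding-based search that production RAG systems more commonly use. The function names and sample documents are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents sharing at least one word with the query."""
    query_words = tokenize(query)
    scored = []
    for doc in documents:
        overlap = len(query_words & tokenize(doc))
        if overlap > 0:
            scored.append((overlap, doc))
    # Highest overlap first; the sort is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


docs = [
    "Brand voice: friendly, direct, no jargon.",
    "Pricing: the Pro plan includes ten seats.",
    "Refunds: the Pro plan can be refunded within 14 days.",
]
results = retrieve("How many seats are in the Pro plan?", docs)
print(results)
```

Only the documents that relate to the query reach the model; the brand voice note shares no words with the question and is left out.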

Memory

Memory preserves conversation state and user history so the system stays coherent across sessions.
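One way to picture this is a small store that holds recent turns plus longer-lived preferences. The class below is a hypothetical in-process sketch; keeping memory across real sessions would also need persistent storage such as a database.

```python
from collections import deque


class SessionMemory:
    """Keeps recent conversation turns plus longer-lived user preferences."""

    def __init__(self, max_turns=4):
        # A deque with maxlen drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)
        self.preferences = {}

    def add_turn(self, speaker, text):
        self.turns.append((speaker, text))

    def remember(self, key, value):
        self.preferences[key] = value

    def render(self):
        """Format stored memory as text to prepend to the next request."""
        prefs = "; ".join(f"{k}={v}" for k, v in self.preferences.items())
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        return f"Preferences: {prefs}\n{history}"


memory = SessionMemory(max_turns=2)
memory.remember("tone", "formal")
memory.add_turn("user", "Draft a launch email.")
memory.add_turn("assistant", "Here is a draft.")
memory.add_turn("user", "Make it shorter.")
print(memory.render())
```

With `max_turns=2`, the first turn has already been dropped by the time `render()` runs, while the tone preference persists.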

Tools and actions

Tools let the model search, fetch records, or trigger tasks instead of only generating text.

Best practices for context engineering implementation

1. Define clear task boundaries

Specify exactly what the AI can and cannot do. Clear boundaries prevent scope creep and improve reliability. An agent designed to answer product questions shouldn't suddenly start processing refunds unless you've explicitly enabled that capability.
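A boundary like this can be enforced in code rather than left to the prompt. The sketch below assumes a hypothetical tool registry and an explicit allowlist; the tool names and return strings are illustrative only.

```python
def answer_product_question(question):
    return f"Looking up product info for: {question}"


def process_refund(order_id):
    return f"Refund issued for order {order_id}"


# Every tool the system knows about (hypothetical examples).
ALL_TOOLS = {
    "answer_product_question": answer_product_question,
    "process_refund": process_refund,
}

# Only tools listed here are enabled for this agent.
ENABLED_TOOLS = {"answer_product_question"}


def run_tool(name, argument):
    """Run a tool only if it has been explicitly enabled for this agent."""
    if name not in ALL_TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    if name not in ENABLED_TOOLS:
        raise PermissionError(f"Tool not enabled for this agent: {name}")
    return ALL_TOOLS[name](argument)


print(run_tool("answer_product_question", "battery life"))
```

If the model requests `process_refund`, the call raises `PermissionError` until someone adds that name to `ENABLED_TOOLS`, so enabling a capability is a deliberate change.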

2. Design modular context layers

Split system rules, retrieved data, and memory into separate layers. This structure makes it easier to update individual parts without breaking everything else and helps teams pinpoint failures when something drifts.

Teams that scale this approach focus less on clever abstractions and more on building reliable habits into their systems:

“Sometimes it’s the basic things that make the most impact, because this is something I do every day. There is no automation that I don’t touch myself before doing something with it.” — Maddy French

That mindset applies directly to context design. Small, well-defined layers with human review checkpoints create systems teams can trust and improve over time.
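The layered split described in this step might look like the following sketch. The `ContextLayers` class and its section titles are hypothetical, chosen only to show each layer being stored and updated separately.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLayers:
    """Holds each kind of context separately so one can change without the others."""
    system_rules: list = field(default_factory=list)
    retrieved: list = field(default_factory=list)
    memory: list = field(default_factory=list)

    def render(self):
        """Join the layers into labeled sections; empty layers are skipped."""
        sections = [
            ("SYSTEM RULES", self.system_rules),
            ("RETRIEVED KNOWLEDGE", self.retrieved),
            ("MEMORY", self.memory),
        ]
        parts = []
        for title, items in sections:
            if items:
                body = "\n".join(f"- {item}" for item in items)
                parts.append(f"## {title}\n{body}")
        return "\n\n".join(parts)


layers = ContextLayers(system_rules=["Use a friendly, direct tone."])
layers.retrieved = ["Pro plan includes ten seats."]  # swap retrieval output only
print(layers.render())
```

Because each layer is labeled in the rendered text, a reviewer inspecting a bad response can see which layer supplied the misleading material.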

3. Prioritize information by relevance and recency

Position matters within the context window. Many models attend more reliably to information near the beginning and end of their context than to material buried in the middle, so place the most important and most recent information in those positions.
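One possible way to act on this is to reorder ranked items so the strongest ones sit at the edges of the context. The helper below is a hypothetical sketch; how much position matters varies by model, so treat the ordering as something to test rather than a guarantee.

```python
def order_for_position(ranked_items):
    """Place top-ranked items at the edges and lower-ranked ones in the middle.

    ranked_items must be sorted from most to least important.
    """
    front, back = [], []
    for index, item in enumerate(ranked_items):
        # Alternate: 1st item goes to the start, 2nd to the end, and so on.
        if index % 2 == 0:
            front.append(item)
        else:
            back.append(item)
    return front + back[::-1]


ranked = ["A (most important)", "B", "C", "D", "E (least important)"]
ordered = order_for_position(ranked)
print(ordered)
```

Here the two most important items end up first and last, and the least important item lands in the middle.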

4. Manage context window limits strategically

Every model has a maximum context size. When information exceeds the limit, something gets cut. Choose what to include carefully, prioritizing information most relevant to the current task.
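A simple way to make that choice explicit is a greedy budget: keep the highest-scoring chunks until the limit is reached. The sketch below assumes relevance scores already exist and uses a rough four-characters-per-token estimate; a real system would count tokens with the model's own tokenizer.

```python
def estimate_tokens(text):
    """Very rough estimate: about four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def fit_to_budget(scored_chunks, max_tokens):
    """Keep the highest-scoring chunks that fit within max_tokens.

    scored_chunks is a list of (relevance_score, text) pairs.
    """
    kept, used = [], 0
    for score, text in sorted(scored_chunks, key=lambda c: c[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost <= max_tokens:
            kept.append(text)
            used += cost
    return kept, used


# Hypothetical sample chunks with made-up relevance scores.
chunks = [
    (0.9, "Pro plan includes ten seats."),
    (0.2, "Company history: founded as a two-person consultancy."),
    (0.7, "Refunds are available within 14 days."),
]
kept, used = fit_to_budget(chunks, max_tokens=20)
print(kept, used)
```

The low-scoring company history chunk is the one left out, so the cut falls on the least relevant material instead of on whatever happens to come last.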

5. Test and iterate across edge cases

Context engineering requires ongoing testing. Check how the system performs with unusual inputs, long conversations, or missing data. Edge cases reveal weaknesses in your context design.
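Checks like these can be automated. The sketch below tests a hypothetical `build_context` function against the three situations named above: missing data, a long conversation, and an unusual input. Both the builder and the expected behaviors are assumptions for illustration.

```python
def build_context(question, documents, history, max_history=3):
    """Hypothetical context builder used here as the system under test."""
    if not question.strip():
        raise ValueError("question must not be empty")
    knowledge = "\n".join(documents) if documents else "No matching documents found."
    recent = "\n".join(history[-max_history:])  # keep only the most recent turns
    return f"Knowledge:\n{knowledge}\nHistory:\n{recent}\nQuestion: {question}"


def run_edge_case_checks():
    """Return a dict of check name -> True/False for a few edge cases."""
    results = {}

    # Missing data: retrieval returned nothing.
    ctx = build_context("What is the refund window?", [], [])
    results["missing_data"] = "No matching documents found." in ctx

    # Long conversation: only the last three turns should survive.
    long_history = [f"turn {i}" for i in range(50)]
    ctx = build_context("Summarize our chat.", [], long_history)
    results["long_conversation"] = "turn 49" in ctx and "turn 46" not in ctx

    # Unusual input: a whitespace-only question should be rejected.
    try:
        build_context("   ", [], [])
        results["blank_question"] = False
    except ValueError:
        results["blank_question"] = True

    return results


checks = run_edge_case_checks()
print(checks)
```

Running a harness like this after every change to rules, retrieval, or memory gives an early signal when an edit to one layer breaks behavior somewhere else.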

Common context engineering challenges

Even well-designed systems drift without ongoing attention. These issues show up quickly once teams move from prototypes to production.

Context limits

Every model caps how much information it can process. When the context overflows, something gets truncated, and without a deliberate policy for what to keep, that can include details the task depends on.

Information noise

Adding everything “just in case” reduces accuracy. Teams must filter ruthlessly so the system only receives what supports the current task.

Consistency across sessions

Decisions about what the system remembers shape how users experience it over time. AirOps analysis shows that 53.4% of pages cited by AI were refreshed within the last six months, and 35.2% within the last three months.

That cadence turns context into a living system. Without a refresh discipline, even well-designed context decays and stops surfacing in AI answers.

AirOps tracks where content loses visibility and triggers refresh workflows tied to real performance changes so context stays aligned with how AI systems surface answers.

Build systems that scale past single prompts

Clear prompts still matter. But teams hit a ceiling when every request must carry its own background rules, brand guidance, and reference material.

Moving that shared material out of individual requests and into system context marks the difference between experimenting with AI and running it in production.

AirOps helps content teams centralize brand knowledge, connect live data to creation, and maintain consistency across AI search and SEO — without rewriting the same guidance every time.

Book a demo to see how AirOps helps teams build context once and use it across every AI-powered content system.

What skills do I need to transition from prompt engineering to context engineering?

Context engineering requires understanding data architecture, API integrations, and system design alongside prompt crafting. You'll need familiarity with vector databases for retrieval, memory management patterns, and how to structure information hierarchies that models can navigate effectively.

How much does context engineering add to AI development costs?

Initial setup costs increase due to infrastructure for retrieval systems, memory storage, and tool integrations. However, teams often see lower long-term costs because they stop manually tuning individual prompts and can reuse context layers across multiple applications.

Can I retrofit context engineering into an existing prompt-based AI system?

Yes, most teams migrate incrementally by first extracting repeated prompt content into system rules, then adding retrieval for dynamic data, and finally implementing memory for session persistence. Start with the highest-friction prompts that require constant updates.
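The first migration step, extracting repeated prompt content, can be approximated with a short script. This sketch treats any line that appears in every prompt as a candidate system rule; the function name and sample prompts are hypothetical, and it expects at least one prompt.

```python
def extract_shared_lines(prompts):
    """Split prompts into lines shared by all of them and per-prompt remainders."""
    line_lists = [p.splitlines() for p in prompts]
    shared = set(line_lists[0])
    for lines in line_lists[1:]:
        shared &= set(lines)
    shared.discard("")  # blank lines are not meaningful shared content
    # Preserve the order in which shared lines appear in the first prompt.
    system_rules = [line for line in line_lists[0] if line in shared]
    remainders = [
        "\n".join(line for line in lines if line not in shared).strip()
        for lines in line_lists
    ]
    return system_rules, remainders


prompts = [
    "Use a friendly tone.\nNever mention competitors.\n"
    "Write a product description for hiking boots.",
    "Use a friendly tone.\nNever mention competitors.\n"
    "Summarize this week's release notes.",
]
system_rules, tasks = extract_shared_lines(prompts)
print(system_rules)
print(tasks)
```

The shared lines become the system rules layer, and each remaining task line stays as a short prompt. Exact line matching is a blunt heuristic, so a person should still review what gets promoted to a rule.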
